What’s Changing in AI Security for 2026? 8 Critical Trends Every Organization Should Know

Introduction

Artificial intelligence has fundamentally changed how businesses operate, innovate, and compete in today's digital economy. From automating customer service to predicting market trends, AI systems have become the backbone of modern enterprise technology. However, this rapid adoption has created a critical vulnerability that many organizations are only beginning to understand: AI security.

Cybercriminals are exploiting AI systems in ways that traditional security measures often fail to detect or prevent. They're poisoning training data, manipulating machine learning models, and using AI-powered tools to launch sophisticated attacks that adapt in real time. Meanwhile, the AI systems that companies rely on daily can become weapons in the wrong hands, exposing sensitive data, compromising decision-making processes, and threatening business continuity.

AI security represents a fundamental shift in how we approach cybersecurity in an interconnected world where intelligent systems make critical decisions autonomously. Organizations face the dual challenge of defending their AI infrastructure from attacks while simultaneously leveraging AI technologies to strengthen their overall security posture.

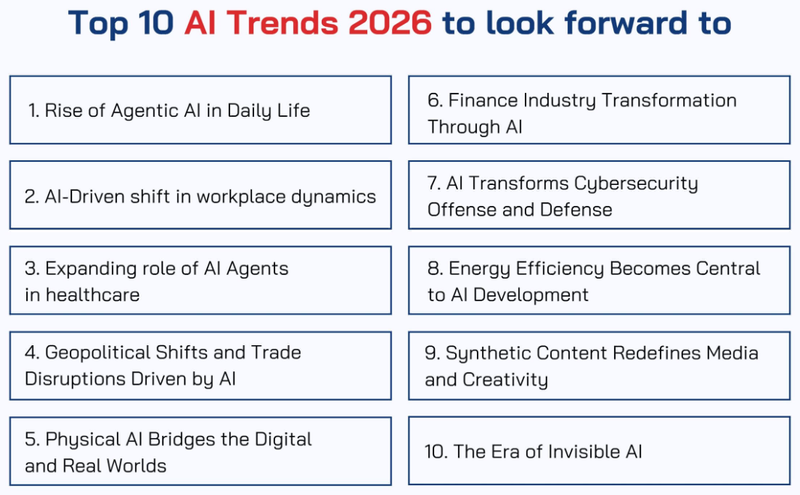

As we progress through 2026, business leaders and security professionals must understand the evolving AI security landscape. The threats are becoming more sophisticated, regulations are tightening, and the consequences of inadequate protection are severe. This guide examines the eight most critical AI security trends shaping this year, providing actionable insights that help organizations build resilient defenses while maintaining the innovation that AI enables. Understanding these trends is essential for organizational survival in an AI-driven future.

What is AI Security?

AI security encompasses two critical dimensions that organizations must understand and address simultaneously.

First, it involves protecting AI systems themselves from attacks, manipulation, and misuse. This includes safeguarding machine learning models from adversarial attacks, preventing data poisoning during training phases, ensuring model integrity, and protecting the infrastructure that runs AI applications. When attackers compromise an AI system, they can manipulate its outputs, steal proprietary algorithms, or use it as a backdoor into broader networks.

Second, AI security refers to using artificial intelligence technologies to enhance cybersecurity measures. Organizations deploy AI-powered tools to detect anomalies, identify threats in real time, automate incident response, and predict potential vulnerabilities before exploitation occurs. These intelligent systems can process vast amounts of data far faster than human analysts, identifying patterns that might otherwise go unnoticed.

The intersection of these two dimensions creates a complex security ecosystem. Organizations must simultaneously defend their AI assets while leveraging AI capabilities to strengthen their overall security posture. This dual challenge requires specialized expertise, robust governance frameworks, and continuous adaptation to emerging threats.

Top 8 AI Security Trends for 2026

1. AI-Powered Threat Detection and Response

Artificial intelligence is revolutionizing how organizations identify and neutralize cyber threats. Traditional security tools rely on signature-based detection, which struggles against zero-day exploits and polymorphic malware. AI-powered systems analyze behavioral patterns, network traffic anomalies, and user activities to detect sophisticated threats that evade conventional defenses.

In 2026, we're seeing extended detection and response (XDR) platforms leverage deep learning algorithms to correlate data across endpoints, networks, cloud environments, and applications. These systems learn normal baseline behaviors and flag deviations that indicate potential compromises. For threats that can be contained automatically, the time from detection to containment can shrink from hours or days to minutes or even seconds, substantially reducing the window of vulnerability.
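To make the idea of learning a baseline and flagging deviations concrete, here is a minimal sketch. It is not how any particular detection platform works: it assumes a single numeric signal (hypothetical logins per hour) and a simple z-score threshold, whereas production systems correlate many signals with far richer models. The function name, data, and threshold are illustrative assumptions.

```python
from statistics import mean, stdev

def flag_deviations(baseline, observations, threshold=3.0):
    """Return (index, value, z_score) for observations far from the baseline."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flagged = []
    for i, value in enumerate(observations):
        z = (value - mu) / sigma
        # Flag anything more than `threshold` standard deviations from normal.
        if abs(z) > threshold:
            flagged.append((i, value, round(z, 2)))
    return flagged

# Hypothetical data: logins per hour during a normal week, then new readings.
baseline_logins = [48, 52, 50, 47, 53, 49, 51, 50]
new_logins = [51, 49, 120, 50]

alerts = flag_deviations(baseline_logins, new_logins)
print(alerts)  # only the spike to 120 is flagged
```

The threshold is the main design choice: a lower value catches subtler deviations but raises more false positives.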

Machine learning models can also estimate which attack vectors are most likely to be exploited, allowing security teams to patch vulnerabilities proactively. This predictive capability helps shift cybersecurity from a reactive discipline toward a proactive strategic function.

2. Adversarial AI and Deepfake Detection

The weaponization of AI has created a new category of threats. Cybercriminals use generative AI to create convincing phishing emails, deepfake videos impersonating executives, and synthetic identities that bypass authentication systems. These adversarial AI techniques are becoming more sophisticated and accessible, lowering the barrier for large-scale fraud.

Organizations are investing heavily in adversarial AI detection technologies. Advanced algorithms analyze subtle inconsistencies in deepfake media, such as unnatural facial micro-movements, audio artifacts, and lighting irregularities, that human observers miss. Multi-factor authentication systems now incorporate liveness detection and behavioral biometrics to prevent synthetic identity fraud.

The arms race between adversarial AI and detection systems intensifies daily. Companies that fail to implement robust deepfake detection risk financial fraud, reputational damage, and compromised decision-making based on manipulated information.

3. AI Model Security and Supply Chain Protection

As organizations increasingly rely on third-party AI models and pre-trained algorithms, supply chain security has emerged as a critical concern. Compromised models can contain hidden backdoors, poisoned training data, or embedded vulnerabilities that activate under specific conditions.

Model provenance tracking is becoming standard practice in 2026. Organizations maintain detailed records of where models originate, how they were trained, what data they consumed, and who has modified them. Cryptographic hashes and signatures, in some cases anchored to blockchain-based ledgers, help verify model integrity throughout the development lifecycle.
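As a rough illustration of provenance tracking, the sketch below records a SHA-256 fingerprint of a model artifact alongside the details mentioned above, then checks a later copy against that record. The field names, helper functions, and example values are assumptions made for illustration, not an established schema, and a real system would also sign and securely store the record.

```python
import hashlib
import hmac
from datetime import datetime, timezone

def fingerprint(model_bytes: bytes) -> str:
    """SHA-256 digest of the serialized model artifact."""
    return hashlib.sha256(model_bytes).hexdigest()

def make_provenance_record(model_bytes, origin, training_data, modified_by):
    """Build a provenance record (hypothetical field names)."""
    return {
        "sha256": fingerprint(model_bytes),
        "origin": origin,
        "training_data": training_data,
        "modified_by": modified_by,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }

def matches_record(model_bytes, record) -> bool:
    """True if the artifact is byte-for-byte what the record describes."""
    return hmac.compare_digest(fingerprint(model_bytes), record["sha256"])

# Stand-in bytes for a serialized model file.
original = b"example-model-weights-v1"
record = make_provenance_record(
    original,
    origin="vendor-x (hypothetical)",
    training_data="dataset-2025-q4 (hypothetical)",
    modified_by=["ml-team"],
)

tampered = original + b"\x00"
print(matches_record(original, record))   # True
print(matches_record(tampered, record))   # False
```

A hash only proves the artifact has not changed since the record was made. It says nothing about whether the model was trustworthy in the first place, which is why adversarial testing before deployment still matters.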

Security teams conduct adversarial testing on AI models before deployment, attempting to manipulate outputs through carefully crafted inputs. Model validation frameworks verify that algorithms perform as intended without hidden behaviors or biases that could be exploited. This rigorous vetting process is essential for maintaining trust in AI-driven operations.
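The following sketch shows the simplest form of this kind of adversarial probe. It assumes a toy linear classifier with made-up weights and nudges each input feature by a small amount in the direction that most reduces the model's score, in the spirit of gradient-sign attacks. Real adversarial testing targets the actual deployed model with dedicated tooling, so treat this only as an illustration of the principle.

```python
def predict(weights, bias, x):
    """Toy linear classifier: returns 1 if the weighted score is positive."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_probe(weights, bias, x, epsilon):
    """Shift every feature by at most epsilon toward the opposite class.

    For a linear model the gradient of the score with respect to the
    input is just the weight vector, so the worst-case small change
    follows the sign of each weight.
    """
    original = predict(weights, bias, x)
    direction = -1 if original == 1 else 1
    perturbed = [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]
    return perturbed, original, predict(weights, bias, perturbed)

# Hypothetical model parameters and input.
weights, bias = [2.0, -1.0, 0.5], -0.5
sample = [0.6, 0.4, 0.2]

_, before_small, after_small = adversarial_probe(weights, bias, sample, epsilon=0.05)
_, before_large, after_large = adversarial_probe(weights, bias, sample, epsilon=0.2)
print(before_small, after_small)  # prediction survives the small change
print(before_large, after_large)  # prediction flips under the larger change
```

The smallest epsilon that flips a prediction gives a rough measure of how robust the model is around that input.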

4. Privacy-Preserving AI Technologies

Growing regulatory pressure and consumer awareness have accelerated the adoption of privacy-preserving AI techniques. Organizations need to extract insights from data while protecting individual privacy, a delicate balance that traditional approaches struggle to achieve.

Federated learning allows models to train across decentralized datasets without centralizing sensitive information. Healthcare organizations, for example, can collaboratively develop diagnostic AI without sharing patient records. Differential privacy techniques add carefully calibrated random noise to query results or model updates, limiting what can be learned about any individual record while keeping aggregate statistics useful.
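To illustrate the differential privacy idea, here is a minimal sketch of the Laplace mechanism applied to a counting query. The patient records, the predicate, and the epsilon value are hypothetical. It uses Python's general-purpose random module for readability, which a production implementation should not rely on.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records matching `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the result by at most 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records: (patient_id, has_condition)
patients = [(i, i % 4 == 0) for i in range(200)]   # 50 patients match
rng = random.Random(42)                            # seeded only for repeatability

noisy = private_count(patients, lambda p: p[1], epsilon=0.5, rng=rng)
print(round(noisy, 1))  # close to 50, but not exactly 50
```

A smaller epsilon means more noise and stronger privacy, and a larger epsilon means more accurate answers with weaker privacy. Choosing it is a policy decision as much as a technical one.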

Homomorphic encryption enables computation on encrypted data, allowing AI models to process sensitive information without ever decrypting it. These technologies are transitioning from academic research to practical implementation, giving organizations powerful tools to comply with regulations like GDPR and CCPA while still leveraging AI capabilities.
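Computing on encrypted data can sound abstract, so the sketch below shows it with a toy version of the Paillier cryptosystem, an additively homomorphic scheme: multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts. The primes here are tiny and the random source is not cryptographically secure, so this is strictly a classroom illustration and must not be used to protect real data. Fully homomorphic schemes used with AI workloads are considerably more involved.

```python
import math
import random

def generate_keys(p, q):
    """Toy Paillier key generation with generator g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                               # modular inverse (Python 3.8+)
    return n, (lam, mu)

def encrypt(n, message, rng):
    n_sq = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    # (1 + n)^m mod n^2 simplifies to 1 + m*n
    return ((1 + message * n) % n_sq) * pow(r, n, n_sq) % n_sq

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n) * mu % n

def add_encrypted(n, c1, c2):
    """Multiply ciphertexts to add the underlying plaintexts."""
    return c1 * c2 % (n * n)

rng = random.Random(0)                  # NOT secure; for repeatability only
n, private_key = generate_keys(293, 433)

c1 = encrypt(n, 1200, rng)
c2 = encrypt(n, 345, rng)
c_sum = add_encrypted(n, c1, c2)        # computed without decrypting anything

print(decrypt(n, private_key, c_sum))   # 1545
```

The party performing the addition never sees 1200 or 345. Only the holder of the private key can read the result.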

5. AI Governance and Regulatory Compliance

The regulatory landscape surrounding AI is solidifying rapidly. Governments worldwide are implementing frameworks that mandate transparency, accountability, and ethical AI development. The European Union's AI Act, emerging US state regulations, and industry-specific requirements are reshaping how organizations develop and deploy AI systems.

AI governance frameworks in 2026 emphasize explainability, bias mitigation, and human oversight. Organizations establish AI ethics committees, conduct algorithmic impact assessments, and maintain audit trails documenting AI decision-making processes. These governance structures aren't just about compliance; they're risk management imperatives that protect organizations from legal liability and reputational harm.

Automated compliance monitoring tools assess AI systems against regulatory requirements, flagging potential violations before they become enforcement actions. This proactive governance approach transforms compliance from a checkbox exercise into a strategic advantage.

6. Quantum-Resistant AI Security

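The approaching era of quantum computing threatens the public-key cryptography that underpins much of today's encryption. A sufficiently powerful quantum computer could break widely used algorithms such as RSA and elliptic-curve cryptography, which protect AI models, training data, and communications between AI systems. Symmetric algorithms such as AES are weakened rather than broken and can generally be reinforced with longer keys.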

Forward-thinking organizations are implementing quantum-resistant cryptography now, before cryptographically relevant quantum computers exist. Post-quantum cryptographic algorithms rely on mathematical problems that are believed to remain hard even for quantum computers. Migrating to these new standards requires significant planning, testing, and coordination across complex IT environments.

AI security architectures in 2026 incorporate crypto-agility, the ability to quickly swap cryptographic algorithms as quantum threats evolve. This flexibility ensures organizations can adapt to the quantum future without complete system overhauls.
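One way to picture crypto-agility is to label every protected item with the algorithm that produced it and keep the algorithm choice in configuration rather than in code. The sketch below applies that pattern to integrity tags on model files, using HMAC with SHA-256 and SHA-512 from Python's standard library purely as stand-ins. They are not post-quantum signature schemes, and the class and method names are hypothetical.

```python
import hashlib
import hmac

# Registry of supported algorithms. Adding a new one is a one-line change.
DIGESTS = {
    "sha256": hashlib.sha256,
    "sha512": hashlib.sha512,
}

class AgileTagger:
    """Issues and verifies integrity tags labeled with their algorithm."""

    def __init__(self, key: bytes, default_alg: str):
        if default_alg not in DIGESTS:
            raise ValueError(f"unsupported algorithm: {default_alg}")
        self.key = key
        self.default_alg = default_alg

    def tag(self, data: bytes) -> str:
        digest = hmac.new(self.key, data, DIGESTS[self.default_alg]).hexdigest()
        return f"{self.default_alg}:{digest}"

    def verify(self, data: bytes, tag: str) -> bool:
        alg, _, digest = tag.partition(":")
        if alg not in DIGESTS:
            return False  # unknown or retired algorithm
        expected = hmac.new(self.key, data, DIGESTS[alg]).hexdigest()
        return hmac.compare_digest(expected, digest)

model_file = b"example-model-weights"
tagger = AgileTagger(key=b"demo-key-not-for-production", default_alg="sha256")
old_tag = tagger.tag(model_file)

# Later: switch the default without touching callers or old tags.
tagger.default_alg = "sha512"
new_tag = tagger.tag(model_file)

print(tagger.verify(model_file, old_tag), tagger.verify(model_file, new_tag))
```

Because each tag names its algorithm, old tags remain verifiable during a migration, and retiring an algorithm is a matter of removing it from the registry.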

7. AI-Driven Identity and Access Management

Traditional identity and access management systems struggle with the complexity and scale of modern enterprises. AI-enhanced IAM solutions continuously analyze user behavior, access patterns, and contextual factors to make intelligent authentication and authorization decisions.

Behavioral biometrics create unique user profiles based on typing patterns, mouse movements, and interaction habits. When behavior deviates from established baselines, AI systems can require additional authentication or restrict access to sensitive resources. This continuous verification approach replaces static credentials with dynamic risk assessment.
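A simplified version of this continuous verification loop might look like the sketch below. It assumes a user profile made of two hypothetical features (typing interval and mouse speed), scores a session by its average deviation from that profile, and maps the score to an access decision. The feature names and thresholds are illustrative assumptions, not values from any real IAM product.

```python
from statistics import mean, stdev

def deviation_score(profile, session):
    """Average absolute z-score of the session across profiled features."""
    scores = []
    for feature, history in profile.items():
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # a feature with no variation carries no signal here
        scores.append(abs(session[feature] - mu) / sigma)
    return mean(scores)

def access_decision(score, step_up_at=2.0, deny_at=4.0):
    """Map a deviation score to allow / step-up authentication / deny."""
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up"
    return "allow"

# Hypothetical behavioral profile built from past sessions.
profile = {
    "key_interval_ms": [110, 120, 115, 125, 130],
    "mouse_speed_px_s": [400, 420, 380, 410, 390],
}

typical = {"key_interval_ms": 118, "mouse_speed_px_s": 405}
unusual = {"key_interval_ms": 150, "mouse_speed_px_s": 450}
extreme = {"key_interval_ms": 200, "mouse_speed_px_s": 600}

for session in (typical, unusual, extreme):
    print(access_decision(deviation_score(profile, session)))
```

The middle tier matters most in practice: asking for an extra factor is far less disruptive than denying access outright when the signal is ambiguous.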

AI-powered privilege management automatically adjusts user permissions based on role changes, project involvement, and risk factors. Rather than relying on manual reviews and approvals, these systems ensure users have appropriate access precisely when needed, then revoke permissions when circumstances change.

8. Automated Vulnerability Management and Patch Orchestration

The volume and velocity of software vulnerabilities exceed human capacity to assess and remediate effectively. AI-driven vulnerability management platforms prioritize threats based on exploitability, business impact, and environmental context rather than generic severity scores.
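The difference between context-aware prioritization and generic severity scores is easiest to see with numbers. The sketch below ranks three hypothetical findings using an invented scoring formula. The multipliers are assumptions chosen for illustration, and real platforms draw on much richer threat intelligence.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    finding_id: str           # placeholder identifier, not a real CVE
    severity: float           # generic 0-10 severity score
    exploit_available: bool   # is a working exploit known to exist?
    asset_criticality: int    # 1 (low) to 5 (business critical)
    internet_facing: bool

def priority(f: Finding) -> float:
    """Hypothetical context-aware score; higher means patch sooner."""
    score = f.severity
    if f.exploit_available:
        score *= 1.5
    if f.internet_facing:
        score *= 1.3
    return score * f.asset_criticality / 5

findings = [
    Finding("VULN-A", 9.8, False, 1, False),  # severe, but on a low-value internal box
    Finding("VULN-B", 7.5, True, 5, True),    # exploitable, exposed, critical asset
    Finding("VULN-C", 6.0, True, 3, False),
]

ranked = sorted(findings, key=priority, reverse=True)
print([f.finding_id for f in ranked])  # ['VULN-B', 'VULN-C', 'VULN-A']
```

The finding with the highest generic severity ends up last, because nothing about its context makes it urgent.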

These intelligent systems correlate vulnerability data with asset inventories, threat intelligence, and business context to determine which patches require immediate deployment. Automated patch orchestration tests updates in isolated environments, predicts compatibility issues, and deploys fixes across distributed infrastructure with minimal human intervention.

The result is dramatically reduced exposure windows. Organizations that once took weeks or months to patch critical vulnerabilities can now respond within days or even hours, substantially shrinking the opportunity for exploitation.

Benefits of AI Security

Implementing robust AI security practices delivers benefits that extend far beyond threat mitigation. Organizations that embrace these technologies gain a competitive edge while protecting their assets.

Enhanced Threat Detection and Response Speed: AI processes massive data volumes in real time, identifying threats that would overwhelm human analysts. Automated response systems contain breaches before they escalate, minimizing damage and reducing recovery costs. Organizations experience fewer successful attacks and shorter dwell times when breaches do occur.

Reduced Operational Costs: While AI security requires initial investment, automation dramatically reduces long-term operational expenses. Security teams handle larger environments without proportional staff increases. Automated workflows eliminate repetitive tasks, allowing skilled professionals to focus on strategic initiatives rather than routine monitoring.

Improved Accuracy and Reduced False Positives: Machine learning algorithms continuously refine threat detection, learning from past incidents to improve accuracy. Organizations waste less time investigating benign anomalies while genuine threats receive immediate attention. This precision increases security team efficiency and reduces alert fatigue.

Proactive Risk Management: Predictive analytics identify vulnerabilities and attack vectors before exploitation. Organizations shift from reactive firefighting to strategic risk mitigation, addressing weaknesses during development rather than after incidents. This proactive stance substantially reduces both the likelihood and the impact of security events.

Regulatory Compliance and Trust: Robust AI security demonstrates commitment to protecting customer data and maintaining system integrity. This builds trust with customers, partners, and regulators while simplifying compliance with evolving legal requirements. Organizations with strong AI security postures differentiate themselves in competitive markets.

Business Continuity and Resilience: AI security measures protect critical systems from disruption, ensuring operational continuity during attacks. Organizations recover faster from incidents, maintaining customer service and revenue streams. This resilience is invaluable in an environment where cyber attacks are increasingly inevitable.

How Can Companies Prepare For 2026?

Navigating the complex AI security landscape requires deliberate planning and sustained commitment. Organizations should take these concrete steps to strengthen their AI security posture.

Conduct Comprehensive Risk Assessments: Identify all AI systems within your organization, including shadow AI deployed without IT oversight. Evaluate the sensitivity of data these systems process, their integration with critical infrastructure, and potential consequences of compromise. Understanding your AI attack surface is the essential first step.

Develop AI Security Policies and Governance: Establish clear policies governing AI development, deployment, and operation. Create cross-functional governance committees that include security, legal, compliance, and business stakeholders. Define acceptable use cases, risk tolerance levels, and approval processes for AI initiatives.

Invest in Security Training: Ensure development teams understand secure AI coding practices, data scientists recognize adversarial attack vectors, and security professionals stay current with AI-specific threats. Create awareness programs that help all employees recognize AI-enabled attacks like deepfake phishing.

Implement Defense in Depth: Layer multiple security controls around AI systems rather than relying on single solutions. Combine network segmentation, access controls, encryption, monitoring, and incident response capabilities. This redundancy ensures that the failure of any single control doesn't compromise the entire system.

Establish Third-Party Vetting Processes: Create rigorous evaluation frameworks for third-party AI tools, models, and services. Assess vendor security practices, model transparency, data handling procedures, and contractual protections. Never deploy external AI components without thorough security validation.

Build Incident Response Capabilities: Develop playbooks specifically addressing AI security incidents, including model poisoning, adversarial attacks, and data extraction. Conduct tabletop exercises simulating these scenarios to identify gaps in your response procedures. Ensure your security operations center has expertise in AI-specific threats.

Partner with AI Security Specialists: The complexity of AI security often exceeds internal capabilities. Collaborate with specialized firms that understand both AI technology and cybersecurity principles. These partnerships provide access to cutting-edge tools, threat intelligence, and expertise that would be prohibitively expensive to develop internally.

Ready to strengthen your organization's AI security posture? Regulance offers comprehensive AI security solutions tailored to your specific needs. Contact Regulance today to schedule a consultation and discover how we can secure your AI-driven future.

FAQs

What is the biggest AI security threat in 2026?

The most significant threat is the convergence of adversarial AI and social engineering. Cybercriminals leverage generative AI to create highly convincing deepfake content and personalized phishing campaigns at scale. These attacks exploit human psychology rather than technical vulnerabilities, making them particularly difficult to defend against through traditional security measures.

How much should companies budget for AI security?

AI security spending varies based on organization size, industry, and AI adoption maturity. A commonly cited rule of thumb is to allocate 10-15% of the IT budget to cybersecurity, with 15-20% of that cybersecurity budget directed specifically at AI security. Organizations that rely heavily on AI may need substantially higher investments.
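Taking those percentages at face value, the arithmetic works out as in the short sketch below. The 10 million IT budget is a made-up figure, and the function name is hypothetical.

```python
def ai_security_budget_range(it_budget,
                             cyber_share=(0.10, 0.15),
                             ai_share=(0.15, 0.20)):
    """Return the (low, high) AI security budget implied by the two ranges."""
    low = it_budget * cyber_share[0] * ai_share[0]
    high = it_budget * cyber_share[1] * ai_share[1]
    return low, high

# Hypothetical annual IT budget of 10 million (any currency).
low, high = ai_security_budget_range(10_000_000)
print(f"AI security budget: {low:,.0f} to {high:,.0f}")
# AI security budget: 150,000 to 300,000
```

In other words, the rule of thumb puts AI security at roughly 1.5% to 3% of total IT spending.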

Can small businesses afford AI security?

Absolutely. While enterprise-grade AI security platforms can be expensive, scalable solutions exist for organizations of all sizes. Cloud-based AI security services, managed security providers, and open-source tools offer cost-effective options. The key is to prioritize based on risk: protect your most critical AI applications first, then expand coverage as resources allow.

What skills do AI security professionals need?

Effective AI security professionals combine traditional cybersecurity expertise with data science knowledge. They understand machine learning algorithms, recognize adversarial attack techniques, and can secure AI infrastructure. Proficiency in Python, familiarity with AI frameworks like TensorFlow or PyTorch, and knowledge of AI governance principles are increasingly essential skills.

How is AI security different from traditional cybersecurity?

AI security addresses unique vulnerabilities that don't exist in traditional systems. Adversarial examples can manipulate AI models without compromising underlying infrastructure. Model inversion attacks extract training data from deployed models. These AI-specific threats require specialized detection techniques and mitigation strategies beyond conventional security tools.

Are AI security regulations coming?

Yes, comprehensive AI regulations are emerging globally. The EU's AI Act establishes risk-based requirements for AI systems. Various US states are implementing AI transparency and accountability laws. Industry-specific regulations in healthcare, finance, and other sectors increasingly address AI security. Organizations should prepare for expanding compliance obligations in the coming years.

Conclusion

The AI security landscape in 2026 presents both extraordinary challenges and unprecedented opportunities. As artificial intelligence becomes deeply embedded in business operations, securing these systems is a strategic imperative that determines organizational resilience and competitive advantage.

The eight trends explored in this article represent the cutting edge of AI security, from sophisticated threat detection to quantum-resistant cryptography. Organizations that embrace these developments position themselves to harness AI's transformative potential while mitigating its inherent risks. Those that ignore these trends expose themselves to increasingly sophisticated attacks that can compromise data, disrupt operations, and damage reputations.

Preparing for the AI security challenges ahead requires commitment, investment, and expertise. It demands that organizations think differently about security, not as a barrier to innovation but as an enabler of responsible AI adoption. The companies that thrive in this environment will be those that integrate security into their AI strategies from the beginning, treating it as a foundational element rather than an afterthought.

The journey toward robust AI security is ongoing, and no organization can afford complacency. Threats evolve continuously, requiring constant vigilance and adaptation. But with the right strategies, tools, and partnerships, organizations can confidently navigate this complex landscape, protecting their assets while unlocking the full potential of artificial intelligence.

The future belongs to organizations that master the delicate balance between AI innovation and security. Start building that future today.