What Is Automation Bias and How Does It Affect Compliance?

In today's rapidly evolving digital world, automation has become the backbone of countless industries, from healthcare and aviation to finance and manufacturing. While automated systems have revolutionized efficiency and accuracy, they've also introduced a subtle yet significant psychological phenomenon that affects decision-making across all sectors: automation bias. This cognitive tendency to over-rely on automated systems can have far-reaching consequences, potentially compromising safety, accuracy, and human judgment.

Understanding automation bias is a critical skill for anyone working with automated systems in today's technology-driven landscape. Whether you're a pilot trusting autopilot systems, a doctor relying on diagnostic software, or a financial analyst depending on algorithmic trading platforms, recognizing and addressing automation bias can mean the difference between optimal performance and costly mistakes.

What Is Automation Bias?

Automation bias refers to the human tendency to over-rely on automated systems and under-utilize or ignore contradictory information from non-automated sources. This cognitive bias manifests as an excessive trust in automated recommendations, alerts, and decisions, often at the expense of critical thinking and independent verification.

The term was coined by researchers Linda Skitka, Kathleen Mosier, and Mark Burdick in the 1990s during their studies of pilot decision-making in automated cockpits. They observed that pilots would sometimes blindly follow automated system recommendations even when other indicators suggested different courses of action. This phenomenon extends far beyond aviation, affecting virtually every field where humans interact with automated systems.

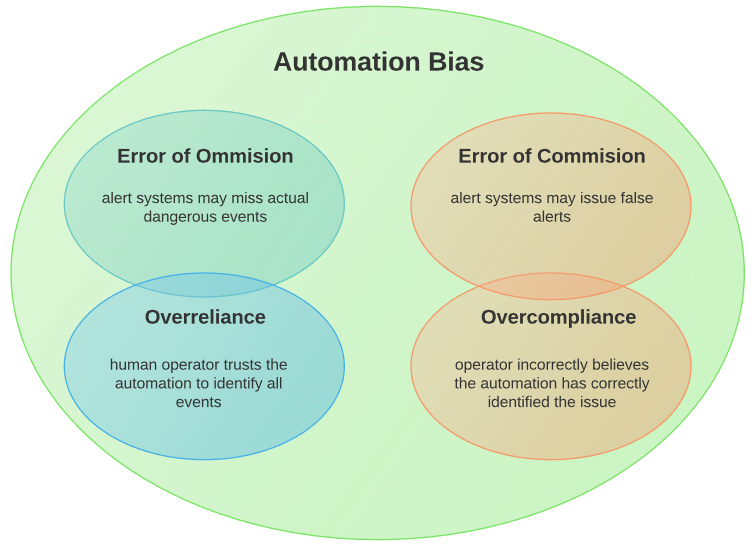

Automation bias operates on two primary levels:

Commission Errors: These occur when individuals act on automated recommendations without proper verification, leading to incorrect actions based on faulty automated advice. For example, a doctor might prescribe medication based solely on an automated diagnostic suggestion without considering the patient's complete medical history.

Omission Errors: These happen when people fail to take necessary action because automated systems don't prompt them to do so. A classic example is when monitoring systems fail to alert operators to developing problems, and human operators don't independently verify system status.
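
To make the two error types concrete, here is a minimal Python sketch that labels entries in a decision log. The `DecisionRecord` fields are hypothetical and assume the ground truth ("was action actually needed?") is established after the fact; a real log will look different.

```python
from dataclasses import dataclass
from enum import Enum


class ErrorType(Enum):
    NONE = "none"
    COMMISSION = "commission"
    OMISSION = "omission"


@dataclass
class DecisionRecord:
    # Hypothetical fields chosen for illustration only.
    system_recommended_action: bool  # did the system prompt an action?
    action_was_needed: bool          # ground truth, known afterwards
    human_acted: bool                # what the person actually did


def classify(record: DecisionRecord) -> ErrorType:
    # Commission: the person acted on a prompt that turned out to be wrong.
    if (record.system_recommended_action
            and record.human_acted
            and not record.action_was_needed):
        return ErrorType.COMMISSION
    # Omission: action was needed, the system stayed silent, nobody acted.
    if (not record.system_recommended_action
            and record.action_was_needed
            and not record.human_acted):
        return ErrorType.OMISSION
    # Everything else (correct decisions, or errors unrelated to following
    # the system) is outside the two categories described above.
    return ErrorType.NONE


print(classify(DecisionRecord(True, False, True)).value)
print(classify(DecisionRecord(False, True, False)).value)
```

Counting how often each label appears over time gives a rough view of which of the two failure modes is more common in a given workflow.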

The bias is particularly dangerous because it can create a false sense of security. When automated systems work correctly most of the time, users develop increasing confidence in their reliability, making them more likely to ignore potential warning signs when systems malfunction or provide incorrect information.

How Automation Bias Works

Understanding the psychological mechanisms behind automation bias reveals why this phenomenon is so pervasive and persistent. The bias operates through several interconnected cognitive processes that have evolved to help humans manage complex information environments, but can become problematic in automated contexts.

Cognitive Load Reduction

Human cognitive capacity is limited, and automated systems promise to reduce the mental effort required to process complex information. When faced with overwhelming data streams or time-pressured decisions, people naturally gravitate toward automated assistance. This reliance becomes problematic when individuals begin to use automation as a substitute for, rather than a supplement to, critical thinking.

Trust Calibration Issues

Trust in automation develops through experience, but this calibration process is often imperfect. Positive experiences with automated systems create increasing confidence, while negative experiences may be rationalized as isolated incidents or user errors. This asymmetric learning process leads to overconfidence in automated capabilities.

Attention Allocation Problems

Automation can create what researchers call "attention tunneling," where users focus narrowly on automated outputs while neglecting other relevant information sources. This selective attention is reinforced by the design of many automated systems, which present information in ways that draw focus away from alternative data sources.

Skill Degradation

Regular reliance on automation can lead to the atrophy of manual skills and independent decision-making capabilities. Pilots who depend heavily on autopilot systems may lose proficiency in manual flying skills, while medical professionals who rely extensively on diagnostic software might see their clinical reasoning abilities diminish over time.

Confirmation Bias Amplification

Automation bias often works in conjunction with confirmation bias, the tendency to seek information that confirms existing beliefs. When automated systems provide recommendations that align with initial impressions, users are less likely to seek contradictory evidence or question the automated advice.

The temporal dynamics of automation bias are also important to understand. The bias tends to strengthen over time as users become more familiar with automated systems and experience their general reliability. This creates a dangerous feedback loop where increasing reliance on automation reduces opportunities to develop the skills needed to detect and correct automated errors.

How to Overcome Automation Bias in Compliance

Addressing automation bias requires a multi-faceted approach that combines individual awareness, organizational policies, and system design improvements. The goal isn't to eliminate the use of automated systems, which would be neither practical nor beneficial, but to optimize the human-automation partnership.

Individual Strategies

Develop Critical Thinking Habits: Establish routines for questioning automated recommendations. Before accepting automated advice, ask yourself: "What additional information would I need to verify this recommendation?" and "What alternative explanations or solutions might exist?"

Practice Active Monitoring: Rather than passively accepting automated outputs, actively engage with the system by regularly checking inputs, questioning logic, and verifying results through independent means. This approach helps maintain situational awareness and keeps cognitive skills sharp.

AI and Humans in the Loop: Review AI results and ensure they align with the organization's processes and with reality. Develop checklists and verification procedures that require you to confirm critical automated recommendations through independent analysis or additional data sources.

Seek Diverse Information Sources: Deliberately consult multiple information sources beyond automated systems. This practice helps identify discrepancies and provides a broader perspective on complex situations.

Organizational Approaches

Training and Education: Organizations should provide comprehensive training on automation bias, including real-world examples and hands-on exercises that demonstrate how the bias can lead to errors. This training should be ongoing, not just a one-time event.

Policy Development: Establish clear policies that require human verification of critical automated decisions. These policies should specify when manual override is appropriate and create accountability mechanisms for decision-making processes.

Cultural Change: Foster organizational cultures that value questioning and critical thinking over blind compliance with automated systems. Encourage employees to report concerns about automated recommendations without fear of retribution.

Regular System Audits: Conduct periodic reviews of automated system performance and human-automation interactions to identify patterns of over-reliance or instances where automation bias may have contributed to errors.

System Design Improvements

Transparency Features: Design automated systems that clearly explain their reasoning processes and highlight uncertainties in their recommendations. Users should understand how systems reach conclusions and what limitations exist.

Appropriate Alerts: Implement alert systems that draw attention to situations where automated recommendations may be unreliable or where additional human verification is especially important.

Forced Verification: Build in mandatory checkpoints that require users to explicitly confirm they have considered alternative information sources before accepting automated recommendations for critical decisions.

Progressive Disclosure: Present information in layers, starting with automated recommendations but making it easy to access underlying data and alternative analyses that support independent verification.
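
As one possible illustration of the "Appropriate Alerts" and "Forced Verification" ideas, the sketch below refuses to accept a critical or low-confidence recommendation until a reviewer records which independent sources they checked. Every name here (`Recommendation`, `Review`, `accept`, the `0.8` threshold) is an assumption made for the example, not a reference to any particular product.

```python
from dataclasses import dataclass, field
from typing import List, Optional

CONFIDENCE_FLOOR = 0.8  # illustrative threshold, not taken from any standard


@dataclass
class Recommendation:
    summary: str
    confidence: float  # 0.0 to 1.0, as reported by the hypothetical system
    critical: bool     # True for high-stakes decisions


@dataclass
class Review:
    reviewer: str
    sources_checked: List[str] = field(default_factory=list)


def needs_verification(rec: Recommendation) -> bool:
    # Alert condition: the decision is critical or the system is unsure.
    return rec.critical or rec.confidence < CONFIDENCE_FLOOR


def accept(rec: Recommendation, review: Optional[Review] = None) -> str:
    # Mandatory checkpoint: no review with at least one independent
    # source means the recommendation cannot be accepted.
    if needs_verification(rec):
        if review is None or not review.sources_checked:
            raise PermissionError(
                "Independent verification required before accepting: "
                + rec.summary
            )
        return f"accepted after review by {review.reviewer}"
    return "accepted automatically"


rec = Recommendation("Close alert as false positive", 0.95, critical=True)
print(accept(rec, Review("analyst", ["ledger export"])))
```

A checkpoint like this only helps if the recorded sources are actually consulted, so it works best alongside the training and audit practices described earlier.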

How Automation Bias Affects Compliance with Practical Examples

In regulatory environments across industries, automation bias poses significant challenges to compliance efforts. The intersection of automated systems and regulatory requirements creates complex scenarios where over-reliance on automation can lead to serious compliance violations.

Financial Services Compliance

In fintech companies, automated compliance monitoring systems scan transactions for suspicious patterns, regulatory violations, and risk indicators. However, automation bias can lead compliance officers to over-rely on these systems without conducting adequate independent analysis. This is particularly problematic because:

- Regulatory requirements often involve nuanced interpretations that automated systems may not capture

- False negatives from automated systems can result in missed violations

- Regulators expect human oversight and independent verification of compliance decisions

For example, anti-money laundering (AML) systems might flag certain transactions as suspicious while missing others that require human insight to identify. Compliance officers experiencing automation bias might focus exclusively on flagged transactions while neglecting broader pattern analysis that could reveal more sophisticated schemes.
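
One simple way to counter an exclusive focus on flagged transactions is to route a random sample of unflagged ones to manual review. The sketch below assumes each transaction is a dictionary with a boolean `flagged` key; that shape, the function name, and the one-in-twenty default are hypothetical choices for illustration.

```python
import random


def sample_unflagged(transactions, one_in=20, seed=None):
    """Pick roughly one in `one_in` unflagged transactions for manual review."""
    rng = random.Random(seed)  # a seed makes the sample reproducible for audits
    unflagged = [t for t in transactions if not t["flagged"]]
    if not unflagged:
        return []
    # Always review at least one unflagged transaction when any exist.
    sample_size = max(1, len(unflagged) // one_in)
    return rng.sample(unflagged, sample_size)


# Hypothetical data: 100 transactions, every tenth one flagged by the system.
transactions = [{"id": i, "flagged": i % 10 == 0} for i in range(100)]
for tx in sample_unflagged(transactions, seed=7):
    print("manual review:", tx["id"])
```

Random sampling will not catch a sophisticated scheme on its own, but it gives reviewers regular exposure to what the system did not flag and a basis for estimating its false-negative rate.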

Healthcare Compliance

Healthcare organizations face stringent regulations regarding patient safety, data privacy, and treatment protocols. Automated systems support compliance in areas like:

- Electronic health record (EHR) audit trails

- Drug interaction checking

- Clinical decision support

- HIPAA compliance monitoring

However, automation bias in healthcare compliance can have serious consequences. Medical professionals might rely too heavily on automated drug interaction checkers without considering patient-specific factors, or assume that EHR systems adequately protect patient data without implementing additional security measures.

Aviation Safety Compliance

The aviation industry has extensive experience with automation bias in safety-critical compliance scenarios. Pilots must comply with numerous regulations regarding flight operations, maintenance requirements, and safety procedures. Automated flight management systems and maintenance tracking software support these compliance efforts, but over-reliance can lead to:

- Failure to identify equipment problems not detected by automated systems

- Inadequate response to unusual situations not covered by automated procedures

- Reduced situational awareness during critical flight phases

Manufacturing and Environmental Compliance

Manufacturing facilities use automated monitoring systems to ensure compliance with environmental regulations, safety standards, and quality requirements. Automation bias in these contexts can result in:

- Missed environmental violations when monitoring systems malfunction

- Inadequate response to safety hazards not detected by automated sensors

- Quality control failures when human inspectors defer too heavily to automated testing

Regulatory Response and Best Practices

Regulatory bodies increasingly recognize the risks associated with automation bias and are developing guidance to address these challenges:

Documentation Requirements: Many regulators now require organizations to document their human oversight processes and demonstrate that automated systems are subject to appropriate human verification.

Training Mandates: Some industries have implemented mandatory training on automation bias and human factors as part of compliance programs.

Audit Focus: Regulatory examinations increasingly focus on how organizations balance automation with human judgment in compliance-critical decisions.

Risk Management Integration: Regulators expect organizations to identify automation bias as a specific risk factor in their compliance risk assessments and implement appropriate controls.

Key Differences Between Automation Bias and Machine Bias

While automation bias and machine bias are related concepts, they represent fundamentally different phenomena that require distinct approaches to address. Understanding these differences is crucial for developing effective strategies to manage both types of bias.

Automation Bias: Human-Centered Phenomenon

Automation bias originates in human psychology and decision-making processes. Key characteristics include:

- Source: Human cognitive limitations and trust calibration issues

- Manifestation: Over-reliance on automated recommendations regardless of system accuracy

- Scope: Affects all interactions between humans and automated systems

- Solution Focus: Training, awareness, and procedural changes for human users

- Timeline: Develops over time through repeated interactions with automated systems

Automation bias is fundamentally about human behavior and can occur even when automated systems are functioning perfectly. A radiologist might exhibit automation bias by accepting an AI diagnostic suggestion without adequate independent analysis, even if the AI system is highly accurate.

Machine Bias: System-Centered Phenomenon

Machine bias refers to systematic errors or prejudices embedded in automated systems themselves. Key characteristics include:

- Source: Biased training data, flawed algorithms, or incomplete system design

- Manifestation: Consistently unfair or inaccurate outputs from automated systems

- Scope: Affects system outputs regardless of human intervention

- Solution Focus: Algorithm improvement, data quality enhancement, and system redesign

- Timeline: Present from system deployment and consistent until addressed through technical changes

Machine bias represents flaws in the automated systems themselves. For example, a hiring algorithm that systematically discriminates against certain demographic groups exhibits machine bias regardless of how humans use its recommendations.

Interaction Between the Two Biases

In real-world scenarios, automation bias and machine bias often interact in complex ways:

Amplification Effect: Automation bias can amplify the impact of machine bias by causing humans to accept discriminatory or inaccurate automated recommendations without question.

Masking Effect: Strong automation bias might prevent humans from detecting machine bias, as people become less likely to notice patterns of systematic errors in automated outputs.

Compound Risk: Organizations face compound risks when both biases are present, as technical flaws in systems combine with human over-reliance to create particularly dangerous scenarios.

Detection and Mitigation Strategies

For Automation Bias:

- Focus on human training and awareness programs

- Implement procedural safeguards requiring independent verification

- Design interfaces that encourage critical thinking

- Monitor patterns of human-automation interaction

For Machine Bias:

- Conduct algorithmic audits and bias testing

- Improve training data quality and representativeness

- Implement technical solutions like bias correction algorithms

- Establish ongoing monitoring of system outputs for discriminatory patterns

For Both:

- Create interdisciplinary teams combining technical and human factors expertise

- Establish comprehensive testing protocols that examine both technical performance and human-system interaction

- Develop governance frameworks that address both technical and behavioral aspects of bias
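
For the machine bias item on monitoring outputs for discriminatory patterns, a basic check is to compare selection rates across groups. The sketch below computes each group's rate relative to the highest-rate group and applies the "four-fifths" screening heuristic from US employment guidelines, under which a ratio below 0.8 is treated as a signal to investigate. The function names and sample data are assumptions for the example, and a low ratio is a prompt for review, not proof of bias.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections to compare")
    return {group: rate / highest for group, rate in rates.items()}


# Hypothetical outcomes from an automated screening step.
outcomes = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
ratios = impact_ratios(selection_rates(outcomes))
needs_review = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, needs_review)
```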

Frequently Asked Questions

What industries are most affected by automation bias?

Automation bias affects virtually every industry that uses automated systems, but some sectors face particularly high risks due to the critical nature of their decisions. Healthcare professionals relying on diagnostic systems, financial analysts using algorithmic trading platforms, air traffic controllers managing automated flight systems, and cybersecurity specialists depending on threat detection software all face significant automation bias challenges. The common thread is that these industries combine high-stakes decision-making with complex automated systems where errors can have serious consequences.

Can automation bias be completely eliminated?

Complete elimination of automation bias is neither realistic nor necessarily desirable. The bias exists because automated systems genuinely provide valuable assistance in managing complex information and making difficult decisions. The goal should be optimization rather than elimination: finding the right balance between leveraging automation benefits and maintaining human judgment. Effective management involves developing awareness, implementing verification procedures, and designing systems that support rather than replace critical thinking.

How quickly does automation bias develop?

Automation bias typically develops gradually through repeated positive experiences with automated systems. Research suggests that the bias can begin forming within weeks of first using an automated system, with strength increasing over months and years of continued use. The development speed varies based on factors including system reliability, user experience level, and the complexity of tasks being automated. Organizations should begin bias prevention training from the moment employees first interact with automated systems.

What role does system design play in automation bias?

System design significantly influences the development and severity of automation bias. Well-designed systems include features that encourage critical thinking, such as confidence indicators, alternative recommendations, and transparent reasoning processes. Poor design choices like presenting automated recommendations as definitive answers or making it difficult to access underlying data can accelerate bias development. The most effective systems are designed as decision support tools rather than decision replacement systems.

How do you measure automation bias in an organization?

Measuring automation bias requires a combination of quantitative and qualitative assessment methods. Organizations can track metrics such as override rates (how often humans reject automated recommendations), decision accuracy in situations where automation and human judgment disagree, and response times for manual verification procedures. Qualitative measures include surveys about trust in automated systems, interviews exploring decision-making processes, and observational studies of human-automation interactions. Regular assessment helps organizations identify bias trends and adjust training or system design accordingly.
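
The quantitative metrics mentioned above can be computed from a simple decision log. The following is a rough sketch, assuming each record stores the system's choice, the human's final choice, and the choice later judged correct; the `Decision` type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    system_choice: str
    human_choice: str
    correct_choice: str  # established later, e.g. by audit


def override_rate(decisions):
    """Share of decisions where the human rejected the system's choice."""
    if not decisions:
        return 0.0
    overrides = sum(d.human_choice != d.system_choice for d in decisions)
    return overrides / len(decisions)


def accuracy_on_disagreement(decisions):
    """How often the human was right when they disagreed with the system."""
    disagreements = [d for d in decisions if d.human_choice != d.system_choice]
    if not disagreements:
        return None  # no disagreements, so nothing to measure
    right = sum(d.human_choice == d.correct_choice for d in disagreements)
    return right / len(disagreements)


def followed_wrong_advice_rate(decisions):
    """Share of system errors that the human accepted anyway."""
    system_errors = [d for d in decisions if d.system_choice != d.correct_choice]
    if not system_errors:
        return None
    followed = sum(d.human_choice == d.system_choice for d in system_errors)
    return followed / len(system_errors)


log = [
    Decision("approve", "approve", "approve"),
    Decision("approve", "reject", "reject"),
    Decision("reject", "reject", "approve"),
    Decision("approve", "reject", "approve"),
]
print(override_rate(log), accuracy_on_disagreement(log), followed_wrong_advice_rate(log))
```

A low override rate is not evidence of bias by itself, since a reliable system should rarely need overriding. It becomes informative when read together with the share of system errors that people accepted.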

What's the difference between healthy reliance and automation bias?

Healthy reliance on automation involves using automated systems as sophisticated tools while maintaining independent judgment and verification capabilities. Users understand system limitations, regularly verify critical recommendations, and remain prepared to override automated advice when appropriate. Automation bias crosses the line into unhealthy territory when users stop questioning automated recommendations, lose the ability to function without automated assistance, or ignore contradictory information from other sources. The key distinction is maintaining active engagement with the decision-making process rather than becoming a passive recipient of automated recommendations.

Are some people more susceptible to automation bias than others?

Research indicates that individual susceptibility to automation bias varies based on several factors. People with less experience in a particular domain may be more susceptible because they lack the background knowledge needed to evaluate automated recommendations critically. Conversely, experts might develop automation bias through overconfidence in their ability to detect system errors. Personality traits such as trust propensity and cognitive flexibility also influence bias susceptibility. Understanding these individual differences helps organizations tailor training and develop personalized strategies for bias prevention.

Conclusion

Automation bias represents one of the most significant challenges in our increasingly automated world. As artificial intelligence and automated systems become more sophisticated and ubiquitous, understanding and managing this cognitive bias becomes not just important, but essential for maintaining human agency and decision-making quality across all sectors of society.

The key insight from our exploration of automation bias is that it's not simply a matter of humans being "lazy" or "over-trusting" of technology. Rather, it's a natural cognitive response to the complexity of modern information environments and the genuine benefits that automated systems provide. The bias develops because automation often works well, creating positive feedback loops that gradually erode critical thinking skills and independent verification habits.

However, recognition of automation bias shouldn't lead to rejection of automated systems. These tools have revolutionized industries, saved countless lives, and enabled unprecedented levels of efficiency and accuracy in complex tasks. The goal is not to eliminate automation but to optimize the partnership between human intelligence and automated capabilities.

Successful management of automation bias requires commitment at multiple levels. Individuals must develop awareness of their own susceptibility to the bias and cultivate habits of critical thinking and independent verification. Organizations must create cultures that value questioning and provide training and policies that support balanced human-automation interaction. System designers must build tools that encourage rather than discourage human engagement with the decision-making process.

The compliance implications of automation bias are particularly significant in our highly regulated business environment. Organizations that fail to address automation bias risk not only operational errors but also regulatory violations and legal consequences. The most successful organizations will be those that recognize automation bias as a specific risk factor and implement comprehensive strategies to manage it.

Looking forward, the challenge of automation bias will likely intensify as automated systems become more sophisticated and harder to understand. Advanced AI systems that use machine learning algorithms can be particularly challenging because their decision-making processes may be opaque even to their creators. This trend makes it even more critical that we develop robust frameworks for maintaining human oversight and critical thinking in automated environments.

The distinction between automation bias and machine bias also highlights the need for comprehensive approaches that address both human and technical factors. Organizations cannot solve automation bias purely through better technology, nor can they address machine bias purely through human training. Both challenges require integrated solutions that combine technical excellence with human factors expertise.

Ultimately, the goal is to create symbiotic relationships between humans and automated systems where each party contributes their unique strengths. Humans bring creativity, contextual understanding, ethical reasoning, and the ability to handle novel situations. Automated systems provide computational power, pattern recognition capabilities, and the ability to process vast amounts of data quickly and consistently.

By understanding automation bias and implementing strategies to manage it, we can harness the full potential of automated systems while preserving the critical thinking and independent judgment that remain uniquely human. This balanced approach will be essential as we navigate an increasingly automated future where the quality of human-machine collaboration will determine success across all aspects of society.

The journey toward optimal human-automation interaction is ongoing, and our understanding of automation bias will continue to evolve. What remains constant is the need for vigilance, awareness, and commitment to maintaining the human element in an automated world. Through continued research, training, and thoughtful system design, we can ensure that automation enhances rather than replaces human intelligence, creating a future where technology serves humanity's highest aspirations.
