What Does the EU AI Act Really Mean for Business and How Can You Stay Ahead?

Introduction

Artificial intelligence is no longer science fiction. It's in our smartphones, our hospitals, our hiring processes, and our financial systems. But as AI becomes more powerful and pervasive, a critical question emerges: who's making sure it's safe, fair, and trustworthy?

The EU AI Act is the world's first comprehensive legal framework for artificial intelligence. This landmark regulation represents a watershed moment in tech governance, setting the standard for how democracies can regulate transformative technologies without stifling innovation. Whether you're a startup founder in Silicon Valley, a compliance officer in Frankfurt, or a product manager in Singapore, the EU AI Act will likely affect how you develop, deploy, and use AI systems.

The EU AI Act is reshaping the global AI landscape, much like the General Data Protection Regulation (GDPR) did for data privacy. Companies worldwide are scrambling to understand its implications, and for good reason. Non-compliance could mean fines of up to €35 million or 7% of global annual turnover, whichever is higher.

In this comprehensive guide, we'll break down everything you need to know about the EU AI Act: what it is, who it affects, why it matters to your business, and how you can prepare for compliance.

What Is the EU AI Act? Understanding Europe's Revolutionary AI Legislation

The EU AI Act is the European Union's regulation on artificial intelligence, designed to ensure that AI systems used within the EU market are safe, transparent, and respect fundamental rights. Approved by the European Parliament in March 2024 and in force since August 2024, it applies in phases from 2025 through 2027 and establishes a unified legal framework across all 27 EU member states.

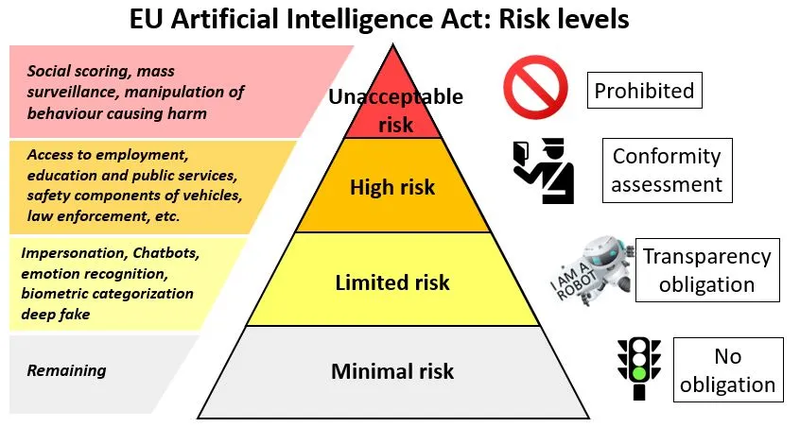

The Risk-Based Approach: Not All AI Is Created Equal

What makes the EU AI Act unique is its risk-based methodology. Rather than treating all AI systems the same way, the regulation categorizes them based on the level of risk they pose to people's safety and fundamental rights. Think of it as a traffic light system for AI:

Unacceptable Risk (Red Light - Prohibited)

These AI systems are banned outright because they pose fundamental threats to human rights and safety. This category includes:

- Social scoring systems, whether run by public or private actors, that evaluate or classify people based on their social behavior or personal characteristics

- Real-time remote biometric identification in publicly accessible spaces for law enforcement purposes, with narrow exceptions for serious crimes

- AI systems that exploit vulnerabilities of specific groups, such as children or people with disabilities

- Subliminal techniques that manipulate people's behavior in harmful ways

High Risk (Yellow Light - Strictly Regulated)

High-risk AI systems can be used but face stringent requirements before they can enter the EU market. These systems are used in areas where mistakes could seriously harm people's lives, including:

- Critical infrastructure management that could endanger lives or health

- Educational and vocational training systems that determine access to education or career paths

- Employment tools for recruitment, hiring, and workforce management decisions

- Essential services like creditworthiness assessments and emergency response dispatch

- Law enforcement applications for evaluating evidence reliability or predicting crimes

- Migration and border control systems

- Administration of justice and democratic processes

High-risk AI systems must meet strict obligations: rigorous testing, comprehensive documentation, human oversight, robust data governance, and transparency about their capabilities and limitations.

Limited Risk (Green Light - Transparency Requirements)

These AI systems pose limited risk but must be transparent. Users need to know they're interacting with AI. This includes:

- Chatbots and conversational AI systems

- Emotion recognition technology

- Biometric categorization systems

- AI-generated content (deepfakes, synthetic media)

For limited-risk systems, the primary obligation is disclosure. People must be informed when they're interacting with AI or viewing AI-generated content.

Minimal Risk (Clear - No Specific Requirements)

The vast majority of AI applications fall into this category and face no additional obligations under the Act. This includes AI-enabled video games, spam filters, and inventory management systems.
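The four tiers above can be sketched as a simple lookup, which is often the first step in an internal AI inventory. This is a minimal illustration only: the use-case labels and the `classify` helper are hypothetical names chosen for this example, and a real classification needs legal review against the text of the Act.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strictly regulated"
    LIMITED = "transparency requirements"
    MINIMAL = "no specific requirements"


# Hypothetical use-case labels, taken from the examples in this guide.
# The mapping is illustrative and not a legal determination.
TIER_BY_USE_CASE = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "subliminal_manipulation": RiskTier.UNACCEPTABLE,
    "recruitment_screening": RiskTier.HIGH,
    "creditworthiness_assessment": RiskTier.HIGH,
    "border_control": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake_generation": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
    "video_game_ai": RiskTier.MINIMAL,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the illustrative tier, or None when the use case is unknown
    and should be escalated for manual review."""
    return TIER_BY_USE_CASE.get(use_case)


if __name__ == "__main__":
    for name in ("recruitment_screening", "chatbot", "route_planner"):
        tier = classify(name)
        print(name, "->", tier.name if tier else "needs manual review")
```

Returning `None` for unknown use cases, rather than defaulting to minimal risk, keeps unclassified systems visible until someone has reviewed them.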

General Purpose AI: Special Rules for Foundation Models

The EU AI Act also addresses general-purpose AI (GPAI) models, including large language models and foundation models like GPT-4, Claude, or Gemini. These powerful systems receive special attention because they can be adapted for countless applications.

GPAI providers must maintain technical documentation, comply with copyright law, publish detailed summaries of training data, and undergo additional obligations if their models pose systemic risk due to high-impact capabilities.

Who Does the EU AI Act Apply To?

One of the most common misconceptions about the EU AI Act is that it only applies to European companies. Wrong. This regulation has extraterritorial reach, meaning it can affect businesses anywhere in the world.

The Three Key Criteria for Applicability

The EU AI Act applies to you if any of the following conditions are met:

You're a Provider Placing AI Systems on the EU Market

If you develop an AI system, or have one developed, with the intent to make it available on the EU market under your name or trademark, you're a provider under the Act. Your physical location doesn't matter. A company in San Francisco offering an AI recruitment tool to businesses in Germany must comply with the EU AI Act.

You're a Deployer Using AI Systems in the EU

Deployers are organizations that use AI systems within the EU, even if they didn't develop them. If your London-based company purchases and implements a high-risk AI system for your Paris office, you have compliance obligations as a deployer.

The AI System's Output Is Used in the EU

Even if you're based outside the EU and your customers are outside the EU, if your AI system produces outputs that are used within the Union, the Act may apply. This creates a ripple effect across global supply chains.
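The three criteria above work as an "any of" test, which can be sketched as follows. The class and function names are assumptions made for this example, and the boolean inputs stand in for what is, in practice, a legal assessment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AISystemContext:
    """Hypothetical summary of how an AI system touches the EU."""
    placed_on_eu_market: bool      # provider makes the system available in the EU
    used_by_deployer_in_eu: bool   # an organization uses the system within the EU
    output_used_in_eu: bool        # the system's output is used in the Union


def scope_reasons(ctx: AISystemContext) -> List[str]:
    """Collect every criterion from this guide that the context meets."""
    reasons = []
    if ctx.placed_on_eu_market:
        reasons.append("provider placing an AI system on the EU market")
    if ctx.used_by_deployer_in_eu:
        reasons.append("deployer using an AI system in the EU")
    if ctx.output_used_in_eu:
        reasons.append("AI system output used in the EU")
    return reasons


def act_may_apply(ctx: AISystemContext) -> bool:
    """Any single criterion is enough to bring the system into scope."""
    return bool(scope_reasons(ctx))


if __name__ == "__main__":
    # The San Francisco recruitment-tool vendor selling to German businesses.
    sf_vendor = AISystemContext(True, False, False)
    print(act_may_apply(sf_vendor), scope_reasons(sf_vendor))
```

Keeping the reasons as a list, rather than a single flag, makes it easier to see which role (provider, deployer, or both) drives the obligations.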

Who Exactly Needs to Worry?

Let's get specific about which organizations should be paying attention:

Technology Companies and AI Developers

If you're building AI products or services, this is your primary concern. Whether you're a Silicon Valley giant or a bootstrapped startup in Bangalore, the moment you want EU customers, you're in scope.

Enterprises Using AI Systems

Large corporations deploying AI for HR, customer service, credit decisions, or operational efficiency need to verify their systems comply with the Act. This includes multinational corporations with EU operations and EU-based companies using AI from international vendors.

SMEs and Startups

Small and medium enterprises aren't exempt, but the regulation does include provisions to reduce the burden on smaller organizations, including priority access to regulatory sandboxes and standardized documentation templates.

Importers and Distributors

Companies that distribute or import AI systems into the EU have specific responsibilities to ensure products bear required conformity markings and that providers have fulfilled their obligations.

Public Sector Organizations

Government agencies and public bodies deploying AI systems for public services, law enforcement, or administrative decisions face particularly stringent requirements given the fundamental rights implications.

What About AI Systems Developed In-House?

The EU AI Act applies to custom-built AI systems developed internally, especially if they fall into high-risk categories. Your proprietary recruitment algorithm or fraud detection system isn't exempt just because it's not commercially available.

Why the EU AI Act Matters to Your Business: Risks, Opportunities, and Strategic Imperatives

The EU AI Act will fundamentally reshape how you develop products, serve customers, manage risks, and compete in the global marketplace.

The Compliance Imperative: Consequences of Getting It Wrong

Let's start with the most immediate concern: penalties. The EU AI Act comes with teeth. Violations can result in administrative fines of up to €35 million or 7% of total worldwide annual turnover from the preceding financial year, whichever is higher. For context, 7% of Amazon's 2023 revenue of roughly $575 billion would be approximately $40 billion.

The fines are tiered based on the severity of the violation. Prohibited AI practices carry the maximum penalty. Non-compliance with other obligations brings fines up to €15 million or 3% of global annual turnover. Even providing incorrect or incomplete information to authorities can cost up to €7.5 million or 1% of turnover.
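The arithmetic behind these ceilings is a maximum of a fixed amount and a share of turnover. The sketch below assumes the "whichever is higher" rule applies to all three tiers, as it does for the top tier described above; the tier keys and the function name are hypothetical, and amounts are whole euros to avoid floating-point rounding.

```python
# (fixed ceiling in EUR, percentage of worldwide annual turnover)
# Tier keys are illustrative labels, not terms from the Act.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(violation: str, worldwide_turnover_eur: int) -> int:
    """Upper bound of the fine: the higher of the fixed ceiling and the
    turnover-based ceiling for the given violation tier."""
    fixed_ceiling, percent = FINE_TIERS[violation]
    turnover_ceiling = worldwide_turnover_eur * percent // 100
    return max(fixed_ceiling, turnover_ceiling)


if __name__ == "__main__":
    # A company with EUR 2 billion turnover: the percentage dominates.
    print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
    # A company with EUR 100 million turnover: the fixed ceiling dominates.
    print(max_fine_eur("prohibited_practice", 100_000_000))    # 35000000
```

For large companies the percentage is almost always the binding figure, which is why exposure scales with revenue rather than stopping at €35 million.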

Non-compliant AI systems cannot be placed on the EU market, meaning you lose access to 450 million potential customers and one of the world's largest economies. Reputational damage from high-profile compliance failures can devastate brand trust, especially in an era where consumers increasingly care about ethical AI.

Market Access and Competitive Advantage

Early compliance creates competitive advantage. Companies that proactively align with the EU AI Act can market their systems as trustworthy, transparent, and rights-respecting. This becomes a powerful differentiator when customers increasingly demand ethical AI.

Compliance also future-proofs your business. The EU AI Act is likely the first of many such regulations worldwide. Countries like Brazil, Canada, and various US states are developing similar frameworks, often using the EU model as inspiration. Invest in compliance now, and you're building capabilities that will serve you globally for years to come.

Operational and Innovation Impacts

The EU AI Act will touch nearly every aspect of how you develop and deploy AI systems.

Development Processes Must Change

High-risk AI system development requires extensive documentation, risk assessment, and testing. You'll need to establish quality management systems, conduct conformity assessments, and maintain technical documentation for at least ten years. This isn't a one-time project; it's an ongoing operational commitment.

Data Governance Becomes Critical

Training data for high-risk AI systems must meet strict quality standards. You need to examine data for biases, ensure appropriate data governance, and document data provenance. For many organizations, this means overhauling existing data practices.

Human Oversight Is Mandatory

High-risk systems must be designed to enable effective human oversight. This means your AI can't be a black box. Humans need to understand outputs, intervene when necessary, and override decisions. Your system architecture must support this from the ground up.
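One way to build oversight into the architecture is to route uncertain outputs to a human who can confirm or override them. The sketch below is a design illustration under stated assumptions: the Act does not prescribe a confidence threshold, and `Decision`, `decide_with_oversight`, and `review_threshold` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    outcome: str
    explanation: str   # human-readable reason shown to the reviewer
    decided_by: str    # "model" or "human"


def decide_with_oversight(
    model_outcome: str,
    model_explanation: str,
    confidence: float,
    reviewer: Callable[[Decision], str],
    review_threshold: float = 0.9,  # assumed policy value, not from the Act
) -> Decision:
    """Accept confident model outputs; send the rest to a human reviewer
    whose answer always replaces the model's proposal."""
    proposed = Decision(model_outcome, model_explanation, "model")
    if confidence >= review_threshold:
        return proposed
    final_outcome = reviewer(proposed)  # the human may confirm or override
    return Decision(final_outcome, model_explanation, "human")


if __name__ == "__main__":
    # A stand-in reviewer that overrides every rejection it is shown.
    def reviewer(proposal: Decision) -> str:
        return "accept" if proposal.outcome == "reject" else proposal.outcome

    print(decide_with_oversight("reject", "score below cutoff", 0.62, reviewer))
```

Recording who made the final call (`decided_by`) alongside the explanation also gives you an audit trail for later documentation.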

Transparency and Explainability

Users and affected individuals have a right to an explanation of AI decisions that significantly affect them. Your systems need to be interpretable, and you need mechanisms to provide meaningful information about how decisions are made.

Supply Chain and Vendor Management

If you're using third-party AI systems, you can't simply trust that vendors are compliant. As a deployer, you have due diligence obligations. This means scrutinizing contracts, demanding compliance documentation, and potentially reassessing your entire AI vendor ecosystem.

For companies selling AI systems, customer questions about EU AI Act compliance will become standard in procurement processes. Your ability to quickly and comprehensively demonstrate compliance will directly impact sales cycles and close rates.

Innovation Within Guardrails

Some worry the EU AI Act will stifle innovation. Compliance does add complexity and cost, but it also channels innovation toward more responsible, trustworthy AI that ultimately has greater market acceptance and longevity.

The regulation includes provisions specifically designed to support innovation, including regulatory sandboxes where companies can test AI systems under regulatory supervision, real-world testing opportunities, and support measures for SMEs and startups.

Frequently Asked Questions About the EU AI Act

When does the EU AI Act come into force?

The EU AI Act was approved by the European Parliament in March 2024, entered into force in August 2024, and is being phased in gradually. Prohibited AI practices became enforceable in February 2025. Rules for general-purpose AI models apply from August 2025. The core requirements for high-risk systems take effect in August 2026, with some existing systems granted until August 2027 for full compliance. This phased approach gives businesses time to prepare, but the clock is ticking.
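The phased timeline can be captured as a small date lookup for planning purposes. The months come from the answer above; the day of the month (the 2nd) follows the Act's published application dates rather than this article, and the function name is a hypothetical one chosen for this sketch.

```python
from datetime import date
from typing import List

# (date the phase starts to apply, description of the phase)
MILESTONES = [
    (date(2025, 2, 2), "prohibited AI practices enforceable"),
    (date(2025, 8, 2), "general-purpose AI model rules apply"),
    (date(2026, 8, 2), "core high-risk requirements take effect"),
    (date(2027, 8, 2), "extended deadline for some existing systems ends"),
]


def phases_in_effect(on: date) -> List[str]:
    """List every phase whose start date is on or before the given day."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    for label in phases_in_effect(date(2025, 9, 1)):
        print(label)
```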

What's the difference between the EU AI Act and GDPR?

While both are EU regulations protecting fundamental rights, they address different issues. GDPR focuses on personal data processing and privacy rights. The EU AI Act regulates AI systems based on their risk level, addressing safety, transparency, and broader fundamental rights beyond privacy. However, they intersect significantly. AI systems that process personal data must comply with both regulations, and many AI Act requirements reinforce GDPR principles like transparency and purpose limitation.

Do I need to appoint someone responsible for AI compliance?

For high-risk AI systems, deployers must assign human oversight responsibilities to individuals with the necessary competence, training, and authority. Many organizations are establishing AI governance committees, appointing Chief AI Officers, or designating compliance teams. Even if not legally required for your specific systems, having clear accountability for AI Act compliance is a best practice that demonstrates due diligence.

What are regulatory sandboxes and can my company participate?

Regulatory sandboxes are controlled environments where companies can test innovative AI systems under regulatory supervision before full market deployment. They provide legal certainty, reduce compliance costs, and offer direct feedback from regulators. EU member states are required to establish these sandboxes, prioritizing SMEs and startups. If you're developing novel AI applications, particularly in high-risk categories, sandboxes offer valuable opportunities to validate your compliance approach.

How does the EU AI Act affect open-source AI?

The Act includes provisions recognizing the specific characteristics of open-source models. Providers of free and open-source GPAI models are exempt from certain transparency obligations unless their models pose systemic risk. However, if open-source components are integrated into commercial AI systems, particularly high-risk ones, the organization deploying that system must ensure overall compliance. The open-source community is actively developing resources to help contributors understand and support compliance.

What if my AI vendor is non-compliant?

As a deployer, you cannot simply defer responsibility to vendors. You must conduct due diligence to verify that high-risk AI systems you use comply with the Act. This includes reviewing conformity documentation, ensuring proper CE marking, and monitoring system performance. If you discover non-compliance, you must stop using the system until issues are resolved and report serious incidents to authorities. This makes vendor selection and contract terms critically important.

Are there specific requirements for AI-generated content?

Yes. AI systems that generate or manipulate image, audio, or video content resembling real persons, places, or events must mark that content in a machine-readable format so that it is detectable as artificially generated or manipulated. This addresses deepfakes and synthetic media concerns. Users must also be informed that they're interacting with an AI system unless it's obvious from context. These transparency requirements apply regardless of risk classification.
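To make "machine-readable" concrete, here is a minimal sketch of a JSON label that travels with a generated file and can be checked by software. The field names and both functions are assumptions for illustration; the Act does not prescribe this format, and a production system would more likely rely on an established provenance or watermarking scheme.

```python
import hashlib
import json


def build_ai_content_label(content: bytes, generator: str) -> str:
    """Create a JSON label declaring the content as AI-generated and
    binding it to the content through a SHA-256 digest."""
    record = {
        "ai_generated": True,     # hypothetical field name
        "generator": generator,   # hypothetical field name
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    return json.dumps(record, sort_keys=True)


def is_labelled_ai_generated(label_json: str, content: bytes) -> bool:
    """Check that the label marks the content as AI-generated and that
    the digest matches, so the label cannot be reused for other files."""
    record = json.loads(label_json)
    digest = hashlib.sha256(content).hexdigest()
    return record.get("ai_generated") is True and record.get("sha256") == digest


if __name__ == "__main__":
    image_bytes = b"example synthetic image bytes"
    label = build_ai_content_label(image_bytes, "demo-image-model")
    print(label)
    print(is_labelled_ai_generated(label, image_bytes))
```

A sidecar label like this is easy to strip, which is one reason embedded watermarks or signed metadata are usually preferred in practice.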

How should small businesses with limited resources approach compliance?

The EU AI Act includes specific support measures for SMEs, including simplified documentation requirements, priority access to regulatory sandboxes, reduced fees for conformity assessments, and guidance materials. Start by inventorying your AI systems and classifying them by risk level. Most will likely be minimal or limited risk with light compliance burdens. For high-risk systems, consider whether you truly need them or if alternative solutions exist. Collaborate with industry associations that are developing shared resources and best practices.

What happens if AI regulations conflict between jurisdictions?

As AI regulation proliferates globally, navigating conflicting requirements will become more complex. The EU AI Act represents one of the most comprehensive frameworks, and many jurisdictions are aligning with it to reduce fragmentation. However, differences will exist. Companies operating internationally need robust AI governance frameworks flexible enough to accommodate varying requirements. Building to the highest standard, often the EU AI Act, generally ensures compliance with less stringent regulations elsewhere.

Will the EU AI Act requirements change over time?

Yes. The Act includes mechanisms for updating its provisions as technology evolves. The European Commission can adopt delegated acts to modify technical details, high-risk classifications, and prohibited practices based on technological developments and emerging risks. Codes of conduct and harmonized standards will also evolve. This means compliance isn't a one-time project but an ongoing process requiring continuous monitoring of regulatory developments and adaptation of systems and processes accordingly.

Conclusion

The EU AI Act represents a fundamental shift in how artificial intelligence is governed, marking the transition from the "Wild West" of unregulated AI to an era of accountability, transparency, and rights-based technology development. This isn't a distant future concern. It's happening now, with enforcement already underway and major provisions taking effect throughout 2025 and 2026.

For businesses, the path forward is clear: understand the regulation, assess your AI systems against its requirements, implement necessary compliance measures, and embed AI governance into your organizational culture. This is not optional. Whether you're a multinational corporation or a growing startup, the EU AI Act will shape your ability to innovate, compete, and access one of the world's most valuable markets.

The complexity of the EU AI Act shouldn't be underestimated. From risk classification to conformity assessments, from data governance to human oversight requirements, comprehensive compliance demands expertise, resources, and systematic approaches. Many organizations are discovering they need specialized support to navigate these requirements effectively.

Ready to future-proof your AI systems? Contact Regulance today and ensure your AI-powered future is compliant, competitive, and built to last.