What Are the EU AI Act’s Key Requirements and What’s the Smartest Way to Address Them?

Introduction

The European Union has taken a groundbreaking step in regulating artificial intelligence with the introduction of the EU AI Act. As organizations worldwide grapple with the ethical implications and potential risks of AI technology, Europe has positioned itself at the forefront of AI governance by establishing the world's first comprehensive legal framework for artificial intelligence. This landmark legislation represents a paradigm shift in how we approach AI development, deployment, and oversight, setting a global precedent that will likely influence AI regulation across continents.

The journey toward the EU AI Act began in April 2021 when the European Commission first proposed this revolutionary framework. After years of intense negotiations and deliberations, the act was officially published in July 2024 and entered into force in August of the same year. What makes this legislation particularly significant is its risk-based approach, which categorizes AI systems according to the level of threat they pose to safety, fundamental rights, and society at large. This nuanced strategy acknowledges that while AI presents tremendous opportunities for innovation and progress, it also carries inherent risks that must be carefully managed and mitigated.

What Is the EU AI Act?

The EU AI Act is the world's first comprehensive legal framework specifically designed to address AI risks while positioning Europe as a global leader in responsible AI development. At its core, the legislation aims to foster trustworthy AI systems that Europeans can confidently embrace, ensuring these technologies serve humanity while protecting fundamental rights, democracy, and the rule of law.

The act defines four distinct risk levels for AI systems: unacceptable risk (prohibited), high risk (strictly regulated), limited risk (transparency obligations), and minimal risk (unregulated). This tiered classification system enables proportional regulation, ensuring that stricter requirements apply only where necessary while allowing innovation to flourish in lower-risk applications.
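For teams that track AI systems in code, the four tiers map naturally onto a small lookup. The sketch below is illustrative only: the names `RiskTier`, `REGULATORY_TREATMENT`, and `treatment_for` are hypothetical and do not come from the act, and the treatment labels simply restate the summary above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the act (identifiers are illustrative)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Summary treatment per tier, restating the classification described above.
REGULATORY_TREATMENT = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "strictly regulated",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "unregulated",
}


def treatment_for(tier: RiskTier) -> str:
    """Return the summary treatment for a given risk tier."""
    return REGULATORY_TREATMENT[tier]


print(treatment_for(RiskTier.LIMITED))  # transparency obligations
```

Assigning a real system to a tier still requires legal analysis; the lookup only records the outcome of that analysis.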

The territorial scope of the EU AI Act extends far beyond European borders. Similar to how the General Data Protection Regulation (GDPR) established global standards for data privacy, the AI Act applies to organizations that place AI systems on the EU market or whose AI system outputs are used within the EU, regardless of where the company is located. This extraterritorial reach means that businesses worldwide must understand and comply with these requirements if they wish to operate in the European market.

The legislation encompasses various actors throughout the AI lifecycle, including providers (developers), deployers (users), importers, and distributors, as well as parties that substantially modify an existing system. Each role carries specific responsibilities and obligations under the act, creating a comprehensive accountability framework that ensures oversight at every stage of an AI system's development and deployment.

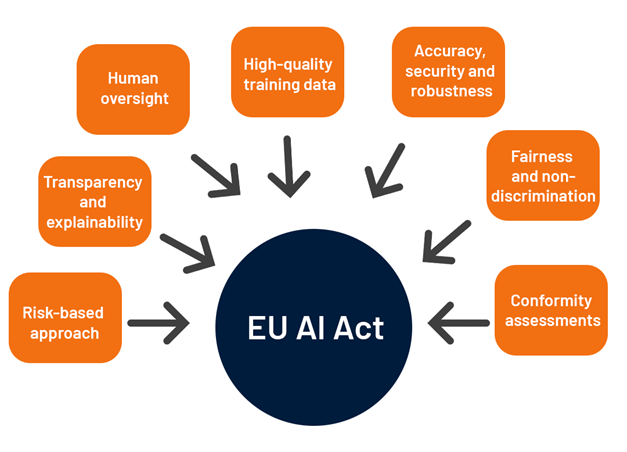

What Are the Key Requirements of the EU AI Act?

The EU AI Act establishes a comprehensive set of requirements that vary based on the risk classification of AI systems. Understanding these obligations is essential for organizations operating in or serving the European market.

Prohibited AI Practices

The act completely bans AI systems that employ subliminal, manipulative, or deceptive techniques to distort behavior and impair informed decision-making in ways that cause significant harm. The prohibitions cover cognitive behavioral manipulation, exploitation of vulnerabilities in specific groups, social scoring, and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes (with narrow exceptions).

These prohibitions took effect on February 2, 2025, marking the first wave of binding obligations under the legislation. Organizations found deploying such systems face severe consequences, demonstrating the EU's commitment to protecting fundamental rights from the outset.

AI Literacy Requirements

Also effective from February 2, 2025, Article 4 mandates that providers and deployers ensure sufficient AI literacy among staff and operators. AI literacy encompasses the ability to make informed decisions regarding AI deployment and risks, understanding potential harms, and handling systems responsibly. Training must be tailored to technical expertise, deployment context, and characteristics of impacted individuals or groups.

High-Risk AI System Obligations

High-risk AI systems face the most stringent requirements under the act. These obligations cover training data and data governance, technical documentation, recordkeeping, technical robustness, transparency, human oversight, and cybersecurity.

Specifically, providers of high-risk systems must use high-quality training data sets that are representative, relevant, and as free of errors as possible. They must create comprehensive technical documentation demonstrating compliance, implement risk management systems throughout the AI lifecycle, ensure appropriate human oversight mechanisms, and maintain accuracy, robustness, and cybersecurity protections.

High-risk AI systems encompass applications in critical infrastructure, educational and vocational training, employment management, access to essential services, law enforcement, migration management, and administration of justice. All high-risk systems must be registered in an EU database and assessed before market placement and throughout their lifecycle.

General-Purpose AI Model Requirements

Starting August 2, 2025, providers of general-purpose AI models face specific regulatory requirements, including maintaining technical documentation, preparing transparency reports, publishing training data summaries, and ensuring copyright compliance.

The training data summary must include data types, sources, and preprocessing methods, with all use of copyright-protected content documented and legally permissible. These transparency measures ensure accountability for models like ChatGPT, Claude, and other large language models that serve as foundations for numerous downstream applications.

Extended obligations apply to particularly powerful GPAI models classified as posing systemic risk based on computing power, range, or potential impact. Providers of systemic-risk models must notify the European Commission of such models, undergo structured evaluation and testing procedures, and document and report serious incidents.
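On the computing-power criterion, the act presumes systemic risk when the cumulative compute used to train a model exceeds 10^25 floating-point operations. A minimal sketch of that single check follows. The function name and the example figures are hypothetical, and the sketch deliberately ignores the other designation criteria (range and potential impact).

```python
# Presumption threshold for systemic risk: cumulative training compute
# greater than 10^25 floating-point operations.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def presumed_systemic_risk(training_flops: float) -> bool:
    """Return True if training compute exceeds the presumption threshold.

    Covers only the compute criterion; designation on other grounds
    is not modeled here.
    """
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical compute figures, for illustration only.
print(presumed_systemic_risk(3e25))  # True
print(presumed_systemic_risk(5e23))  # False
```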

Transparency and Documentation

The act introduces specific disclosure obligations ensuring humans are informed when interacting with AI systems like chatbots, enabling informed decision-making. Providers of generative AI must ensure AI-generated content is identifiable, addressing concerns about deepfakes and synthetic media.

Documentation requirements are extensive, particularly for high-risk systems. Organizations must maintain detailed records of system development, training methodologies, data sources, testing procedures, and performance metrics. This documentation serves multiple purposes: demonstrating compliance to regulators, enabling effective oversight, and facilitating incident investigation when problems arise.

Governance and Oversight Structures

Companies must establish complete AI inventories with risk classification, clarify organizational roles, prepare necessary technical and transparency documentation, implement copyright and data protection requirements, train employees on AI competence, and adapt internal governance structures.

The governance framework includes designating responsible persons within organizations, establishing clear chains of accountability, and implementing post-market monitoring systems. These structural measures ensure ongoing compliance rather than treating it as a one-time checkbox exercise.
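An AI inventory with risk classification and named owners can start as a very simple data structure. The sketch below is one hypothetical shape for it: the `AISystemRecord` fields, the `find_gaps` helper, and the sample systems are all assumptions made for illustration, not a format prescribed by the act.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystemRecord:
    """One entry in a hypothetical internal AI inventory."""
    name: str
    role: str        # e.g. "provider" or "deployer"
    risk_tier: str   # "unacceptable", "high", "limited", or "minimal"
    responsible_person: Optional[str] = None
    technical_docs_ready: bool = False


def find_gaps(inventory: list[AISystemRecord]) -> dict[str, list[str]]:
    """Return, per system name, the governance gaps that need follow-up."""
    gaps: dict[str, list[str]] = {}
    for record in inventory:
        issues = []
        if record.responsible_person is None:
            issues.append("no responsible person designated")
        if record.risk_tier == "high" and not record.technical_docs_ready:
            issues.append("technical documentation missing")
        if issues:
            gaps[record.name] = issues
    return gaps


# Sample entries, invented for illustration.
inventory = [
    AISystemRecord("cv-screening", "deployer", "high"),
    AISystemRecord("spam-filter", "deployer", "minimal", "IT lead"),
]
print(find_gaps(inventory))
```

Here only the high-risk system without an owner or documentation is reported; the minimal-risk system with a named owner passes.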

What Are the Advantages of the EU AI Act?

While compliance requirements may initially appear burdensome, the EU AI Act delivers substantial benefits to organizations, consumers, and society at large.

Building Consumer Trust and Market Access

The act aims to strike a balance between fostering AI adoption and upholding individuals' rights to responsible, ethical, and trustworthy AI use. By establishing clear standards, the legislation helps build public confidence in AI technologies, potentially accelerating adoption and market growth.

Organizations demonstrating compliance gain a competitive advantage, particularly when serving privacy-conscious European consumers. Demonstrable "trustworthy AI" credentials become a valuable differentiator in increasingly crowded markets, similar to how GDPR compliance became a selling point for data-handling services.

Encouraging Innovation Through Clarity

AI creates many benefits, including better healthcare, safer and cleaner transport, more efficient manufacturing, and cheaper and more sustainable energy. The risk-based approach ensures that minimal-risk AI applications, which comprise the vast majority of current systems, remain largely unregulated, allowing innovation to continue unimpeded.

By providing clear rules and expectations, the act reduces regulatory uncertainty that can stifle innovation. Organizations know precisely what is required, enabling them to design compliant systems from inception rather than retrofitting compliance after development. This clarity particularly benefits small and medium-sized enterprises that lack extensive legal resources.

Establishing Global Standards

Just as GDPR set the global benchmark for data protection, the EU AI Act is positioning itself as the international standard for AI regulation. Organizations that achieve compliance with European requirements will be well-positioned for emerging regulations in other jurisdictions, many of which are already using the EU framework as a reference point.

This harmonization reduces the complexity and cost of compliance across multiple markets, creating economies of scale for multinational organizations. Rather than adapting to dozens of different regulatory regimes, companies can build to one comprehensive standard that satisfies requirements globally.

Promoting Responsible AI Development

The act addresses key risks including lack of transparency in AI decision-making, accountability gaps, and health and safety concerns in critical sectors. By mandating risk assessments, human oversight, and technical compliance, the legislation pushes organizations toward more responsible development practices.

These requirements often result in better-designed systems that are more robust, reliable, and aligned with human values. The focus on explainability and transparency can actually improve AI performance by forcing developers to understand and articulate how their systems function, leading to more effective debugging and optimization.

Mitigating Legal and Reputational Risks

Non-compliance with the EU AI Act carries significant penalties, with fines reaching up to €35 million or 7% of global annual turnover, whichever is higher. Beyond financial penalties, non-compliance can result in severe reputational damage, loss of market access, and erosion of consumer trust.
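The "whichever is higher" rule is easy to misread, so a short worked calculation may help. The sketch below computes only the ceiling quoted above; the function name and the turnover figures are hypothetical, and the fine actually imposed in a given case depends on the violation and the regulator's assessment.

```python
def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Ceiling of the top-tier fine: EUR 35 million or 7% of global
    annual turnover, whichever is higher (whole euros)."""
    fixed_ceiling = 35_000_000
    turnover_ceiling = global_annual_turnover_eur * 7 // 100
    return max(fixed_ceiling, turnover_ceiling)


# Hypothetical turnover figures, for illustration only.
print(max_fine_eur(200_000_000))    # 35000000 (7% would be only 14 million)
print(max_fine_eur(2_000_000_000))  # 140000000 (7% exceeds 35 million)
```

The fixed amount dominates until turnover reaches EUR 500 million, after which the percentage sets the ceiling.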

Proactive compliance protects organizations from these risks while demonstrating commitment to ethical AI practices. In an era where corporate responsibility increasingly influences consumer choices and investor decisions, such commitment delivers tangible business value beyond mere regulatory adherence.

How Is Automation Shaping the EU AI Act?

Automation technologies are fundamentally transforming both the implementation of and compliance with the EU AI Act, creating a dynamic interplay between regulatory requirements and technological solutions.

Automating Compliance Processes

AI can automate tedious or repetitive tasks such as data gathering, documentation, and monitoring, allowing compliance teams to focus on more strategic activities. Automated systems can continuously monitor AI deployments for compliance violations, track regulatory changes, and maintain the extensive documentation required under the act.

Compliance automation platforms can map regulatory requirements to internal controls, automatically generate audit trails, and flag potential non-compliance issues before they escalate. This proactive approach reduces the manual burden on compliance teams while increasing the reliability and comprehensiveness of compliance efforts.
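The requirement-to-control mapping described above can be pictured as a small gap check. This is a simplified sketch, not how any particular platform works: the control IDs, the `REQUIREMENT_CONTROLS` mapping, and the `flag_gaps` helper are all invented for illustration.

```python
# Hypothetical mapping from regulatory requirements to internal control IDs.
REQUIREMENT_CONTROLS = {
    "technical documentation": ["DOC-01"],
    "recordkeeping": ["LOG-01", "LOG-02"],
    "human oversight": ["OVS-01"],
    "cybersecurity": [],
}

# Hypothetical set of controls that are currently implemented.
IMPLEMENTED_CONTROLS = {"DOC-01", "LOG-01"}


def flag_gaps(mapping, implemented):
    """Return requirements with no mapped control or with unimplemented controls."""
    flagged = {}
    for requirement, controls in mapping.items():
        missing = [c for c in controls if c not in implemented]
        if not controls:
            flagged[requirement] = "no control mapped"
        elif missing:
            flagged[requirement] = "not implemented: " + ", ".join(missing)
    return flagged


for requirement, issue in flag_gaps(REQUIREMENT_CONTROLS, IMPLEMENTED_CONTROLS).items():
    print(f"{requirement}: {issue}")
```

In this example, technical documentation is fully covered, while recordkeeping, human oversight, and cybersecurity are flagged for follow-up.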

Real-Time Monitoring and Risk Detection

AI systems can enter data, perform calculations, and execute maneuvers more quickly and efficiently than humans, playing a vital role in critical sectors like healthcare and aviation. Applied to compliance, these capabilities enable real-time monitoring of AI system performance, immediate identification of anomalies, and rapid response to potential compliance breaches.

Automated monitoring systems can track millions of AI interactions, flagging instances where systems may be exhibiting bias, generating inappropriate outputs, or operating outside defined parameters. This level of continuous oversight would be impossible to achieve through manual review, making automation essential for effective compliance at scale.
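As a rough picture of what "operating outside defined parameters" can mean in practice, the sketch below checks logged interactions against two operating bounds. Everything here is assumed for illustration: the `Interaction` fields, the thresholds, and the sample data. Detecting bias or inappropriate outputs would need considerably more than this.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """A logged model interaction (fields are hypothetical)."""
    interaction_id: str
    confidence: float
    latency_ms: float


# Hypothetical operating bounds agreed for the system.
MIN_CONFIDENCE = 0.6
MAX_LATENCY_MS = 2000.0


def flag_out_of_bounds(interactions):
    """Return (id, reason) pairs for interactions outside the defined bounds."""
    flagged = []
    for item in interactions:
        if item.confidence < MIN_CONFIDENCE:
            flagged.append((item.interaction_id, "confidence below minimum"))
        if item.latency_ms > MAX_LATENCY_MS:
            flagged.append((item.interaction_id, "latency above maximum"))
    return flagged


# Sample log entries, invented for illustration.
log = [
    Interaction("a1", 0.92, 350.0),
    Interaction("a2", 0.41, 420.0),
    Interaction("a3", 0.88, 2600.0),
]
print(flag_out_of_bounds(log))
```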

Streamlining Documentation and Reporting

The EU AI Act's extensive documentation requirements create significant administrative burdens. Automation technologies address this challenge by automatically generating and maintaining technical documentation, tracking data lineage, documenting model training processes, and compiling transparency reports.

Version control systems automatically document changes to AI models, data sources, and deployment configurations, creating the comprehensive audit trail required by regulators. Natural language generation can transform technical specifications into human-readable transparency reports, satisfying disclosure obligations while reducing manual writing time.

Enhancing Data Governance

Establishing a single source of truth for data becomes a regulatory imperative under the EU AI Act to ensure data traceability. Automated data governance platforms consolidate data management activities, track data quality, monitor data usage for copyright compliance, and ensure appropriate data protection measures.

These platforms provide the visibility and control necessary to demonstrate compliance with data governance requirements, particularly for high-risk AI systems where data quality directly impacts safety and fairness.

Facilitating Impact Assessments

High-risk AI systems require comprehensive risk assessments, and certain deployers must also carry out fundamental rights impact assessments. Automation tools can streamline these assessments by systematically evaluating AI systems against regulatory criteria, identifying potential biases or discrimination, assessing impacts on fundamental rights, and generating assessment documentation.

While human judgment remains essential for contextual and ethical considerations, automated tools provide consistent frameworks and comprehensive analysis that enhance the quality and reliability of impact assessments.

Enabling Adaptive Compliance

The judicious use of tools like master data management and data governance software can significantly reduce the burden of compliance, particularly when automation spans workflows and functions across environments. As regulations evolve and new requirements emerge, automated compliance systems can be rapidly updated to reflect new obligations, ensuring ongoing compliance without complete system redesigns.

This adaptability is crucial given the phased implementation of the EU AI Act and the likelihood of future amendments as AI technology continues to evolve. Automated systems can absorb these changes more efficiently than manual processes, providing organizations with the agility needed to remain compliant in a dynamic regulatory landscape.

FAQs

When does the EU AI Act take full effect?

The act entered into force on August 1, 2024, and will be fully applicable by August 2, 2026, with exceptions: prohibitions and AI literacy obligations became effective February 2, 2025, governance rules and GPAI model obligations became applicable August 2, 2025, and high-risk system rules embedded in regulated products have an extended transition until August 2, 2027.
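The phased dates above lend themselves to a simple lookup. The sketch below encodes them and reports which milestones have been reached on a given day; the `MILESTONES` list and the `milestones_reached` function are illustrative names, and the labels are shortened summaries of the answer above.

```python
from datetime import date

# Application dates as stated above; labels are shortened summaries.
MILESTONES = [
    (date(2024, 8, 1), "entry into force"),
    (date(2025, 2, 2), "prohibitions and AI literacy obligations"),
    (date(2025, 8, 2), "governance rules and GPAI model obligations"),
    (date(2026, 8, 2), "general application"),
    (date(2027, 8, 2), "high-risk rules for systems embedded in regulated products"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones whose date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


for label in milestones_reached(date(2025, 9, 1)):
    print(label)
```

For 1 September 2025, this prints the first three milestones and leaves the 2026 and 2027 dates pending.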

Does the EU AI Act apply to companies outside Europe?

Yes, the act has extraterritorial reach similar to GDPR. It applies to organizations that place AI systems on the EU market or whose AI system outputs are used within the EU, regardless of where the provider is based. This means companies worldwide must comply if they serve European markets.

What are the penalties for non-compliance?

AI Act violations may be punished with significant penalties, including fines of up to €35 million or 7% of global annual turnover, whichever is higher. The specific penalty depends on the nature and severity of the violation, with prohibited practices attracting the highest fines.

Are all AI systems regulated under the act?

No, the risk-based approach means most AI systems face minimal or no regulation. The vast majority of AI systems currently used in the EU fall into the minimal risk category, including applications like AI-enabled video games and spam filters. Only systems posing significant risks face stringent requirements.

What is considered a high-risk AI system?

High-risk systems include those used in critical infrastructure, education, employment, essential services, law enforcement, migration management, and justice administration. Additionally, AI systems serving as safety components in products already subject to EU regulations may qualify as high-risk.

How do general-purpose AI models differ from high-risk AI systems?

General-purpose AI models are foundation models, such as those behind ChatGPT or Claude, that can perform many different tasks. They face horizontal transparency and documentation requirements. High-risk AI systems are specific applications deployed in sensitive contexts, facing more extensive obligations related to risk management, human oversight, and performance monitoring.

What is AI literacy and why is it required?

AI literacy is defined as the ability to make informed decisions regarding AI deployment and risks and to understand the potential harms AI can cause. The requirement ensures that individuals operating AI systems have appropriate skills, knowledge, and understanding to use them responsibly.

Can companies use AI to help with EU AI Act compliance?

Absolutely. Many organizations are leveraging automation and AI-powered compliance tools to manage the act's requirements more efficiently. These technologies can monitor compliance, maintain documentation, track regulatory changes, and flag potential issues, significantly reducing manual compliance burdens.

What support is available for small and medium-sized enterprises?

The German Federal Network Agency has established an AI Service Desk to serve as a first point of contact for small and medium-sized enterprises, particularly for questions relating to the act's practical implementation. Similar support mechanisms are being established across member states.

How often will regulations be updated?

The EU AI Act includes provisions for codes of practice, technical standards, and guidelines that will evolve as AI technology advances. Organizations should regularly monitor regulatory developments and adapt compliance strategies accordingly. The Commission and national authorities will provide ongoing guidance to support implementation.

Conclusion

The EU AI Act represents a watershed moment in technology regulation, establishing the first comprehensive legal framework governing artificial intelligence at a societal level. By adopting a nuanced, risk-based approach, the legislation balances the need to protect fundamental rights and public safety with the imperative to foster innovation and maintain Europe's competitive position in AI development.

For organizations worldwide, understanding and complying with the EU AI Act is essential for market access, consumer trust, and long-term sustainability. The phased implementation provides time for preparation, but the message is clear: the era of unregulated AI is ending, and organizations must adapt to this new reality.

The key requirements, from prohibited practices and AI literacy to high-risk system obligations and general-purpose AI model transparency, create a comprehensive accountability framework that touches every aspect of AI development and deployment. While these requirements present challenges, they also deliver significant advantages, including enhanced consumer trust, clearer innovation pathways, reduced legal risks, and alignment with emerging global standards.

Automation technologies are proving invaluable in managing compliance complexity, enabling real-time monitoring, streamlining documentation, and facilitating adaptive responses to evolving requirements. Organizations that embrace these tools position themselves not just for compliance, but for competitive advantage in an increasingly regulated landscape.

As we move toward full implementation in 2026, the EU AI Act will continue shaping the global conversation about responsible AI. Organizations that proactively embrace its principles of trustworthiness, transparency, accountability, and human-centric design will not only comply with European requirements but will also be better prepared for the inevitable expansion of AI regulation worldwide.

Don't wait until enforcement actions begin. Partner with Regulance today to transform compliance from a burden into a competitive advantage. Our intelligent automation platform ensures you're always audit-ready, allowing your team to focus on innovation while we handle the complexity of regulatory compliance.

Visit Regulance.io to schedule a demo and discover how our platform can simplify your EU AI Act compliance journey.