Is Your Cybersecurity Strategy Ready for the EU AI Act? Here’s What You Need to Know

Introduction

Artificial intelligence has become deeply woven into the fabric of our daily lives. From facial recognition systems at airports to algorithms that decide loan approvals, AI technologies are making critical decisions that affect millions of people every single day. But as these systems grow more powerful and pervasive, a troubling question emerges: who's ensuring they're secure from cyberattacks and malicious manipulation?

The answer is increasingly found in the EU AI Act, the world's first comprehensive legislative framework specifically designed to regulate artificial intelligence. Adopted in 2024, this landmark regulation is fundamentally changing the relationship between AI development and cybersecurity. It does not simply add another layer of compliance requirements; it establishes new standards for how AI systems must be protected, monitored, and secured throughout their lifecycle.

What makes the EU AI Act particularly significant for cybersecurity is its mandatory approach. Unlike voluntary guidelines or industry best practices, this legislation carries substantial legal weight: penalties for the most serious violations, such as using prohibited AI practices, reach up to €35 million or 7% of global annual turnover, whichever is higher. Companies can no longer treat AI security as an afterthought or a nice-to-have feature.

The implications stretch far beyond European borders. Whether you're a multinational corporation, a growing startup, or a cybersecurity professional, the EU AI Act's requirements are reshaping how AI systems must be designed, developed, and defended against emerging threats. Understanding this intersection between the EU AI Act and cybersecurity has become critical for anyone working with or affected by artificial intelligence technologies.

This guide explores how the EU AI Act is revolutionizing cybersecurity practices, what it means for businesses and individuals, and why these changes matter for the future of safe and trustworthy AI.

What is the EU AI Act?

The EU AI Act, officially adopted in 2024, represents the European Union's ambitious attempt to regulate artificial intelligence before the technology races too far ahead of legal frameworks. It is often described as the GDPR of the AI world: a comprehensive rulebook that sets clear boundaries for how AI can be developed, deployed, and used across all 27 EU member states.

The EU AI Act takes a risk-based approach to regulation. Not all AI systems are created equal, and the Act recognizes this by categorizing AI applications into four distinct risk levels: unacceptable risk (banned outright), high risk (heavily regulated), limited risk (requiring transparency), and minimal risk (largely unregulated).
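To make the four tiers concrete, here is a minimal Python sketch of how a team might run a first-pass triage over its internal AI inventory. The tier labels follow the list above, but the lookup sets, purpose strings, and the `classify` function are hypothetical simplifications: real classification turns on the system's intended purpose as assessed against the Act's prohibited-practice list, its high-risk annexes, and their exceptions. Treat the output as a starting point for legal review, not a verdict.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # banned outright
    HIGH = "high"                  # heavily regulated
    LIMITED = "limited"            # transparency obligations
    MINIMAL = "minimal"            # largely unregulated


# Hypothetical, heavily simplified lookup sets keyed by intended purpose.
PROHIBITED_USES = {"social_scoring", "manipulative_subliminal_techniques"}
HIGH_RISK_AREAS = {
    "critical_infrastructure", "education", "employment", "essential_services",
    "law_enforcement", "migration_border_control", "administration_of_justice",
}
TRANSPARENCY_USES = {"chatbot", "deepfake_generation"}


def classify(intended_purpose: str) -> RiskTier:
    """Return a first-pass risk tier, checking from the strictest tier down."""
    if intended_purpose in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if intended_purpose in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if intended_purpose in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


inventory = {"cv_screening": "employment", "support_bot": "chatbot", "spam_filter": "spam_filtering"}
for system, purpose in inventory.items():
    print(f"{system}: {classify(purpose).value}")
# cv_screening: high
# support_bot: limited
# spam_filter: minimal
```

Checking the strictest tier first mirrors how the Act works in practice: a system that falls under a prohibition is banned regardless of any other category it might also fit.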

What makes this legislation particularly powerful is its extraterritorial reach. Even if your company is headquartered in Silicon Valley or Tokyo, if you place AI systems on the EU market or your AI generates outputs used within the EU, you're within the Act's scope. This global impact means that the EU AI Act isn't just European law; it's becoming a de facto global standard.

The Act places special emphasis on what it calls "high-risk" AI systems, which include applications in critical infrastructure, education, employment, law enforcement, and border control. These systems face stringent requirements around data quality, transparency, human oversight and, most importantly for this discussion, cybersecurity.

What is Cybersecurity?

Cybersecurity is the practice of protecting computer systems, networks, programs, and data from digital attacks, unauthorized access, damage, or theft. But it's evolved far beyond just installing antivirus software or creating strong passwords. Modern cybersecurity encompasses a complex ecosystem of technologies, processes, and practices designed to safeguard digital assets in an increasingly connected world.

In the AI era, cybersecurity takes on new dimensions. Beyond protecting data, it must protect the algorithms that learn from that data, the models that make predictions, and the decision-making processes that affect real people's lives. AI systems themselves can be targets of sophisticated attacks like adversarial examples, data poisoning, model theft, and backdoor attacks.

Cybersecurity today involves protecting against threats like ransomware attacks that can cripple entire organizations, phishing schemes that exploit human psychology, supply chain vulnerabilities that compromise software before it even reaches users, and insider threats from within organizations. When you add AI into this mix, the stakes get even higher because compromised AI systems can make thousands of flawed decisions before anyone notices something's wrong.

The relationship between AI and cybersecurity is bidirectional: AI can be a powerful tool for cybersecurity defense, detecting threats at speeds no human analyst could match, but AI systems themselves need robust cybersecurity measures to prevent them from becoming weapons in the wrong hands.

What Are the Key Areas of Impact?

The EU AI Act's influence on cybersecurity spans multiple critical domains, creating ripples across the entire digital ecosystem. Let's explore the most significant areas where this legislation is making its mark:

AI System Security by Design

The Act requires high-risk AI systems to be designed and developed so that they achieve an appropriate level of accuracy, robustness, and cybersecurity from the outset. In practice, this "security by design" principle means conducting thorough risk assessments during development, implementing robust security architectures, and testing for vulnerabilities before deployment and throughout the system's life. Gone are the days when companies could launch AI products and worry about security later.

Data Governance and Protection

Since AI systems are only as good as the data they're trained on, the EU AI Act places heavy emphasis on data security throughout the AI lifecycle. This includes protecting training datasets from tampering or poisoning, ensuring that data collection and processing comply with existing privacy regulations, securing data storage and transmission channels, and maintaining data integrity during model training and updates. Any breach in data security could compromise the entire AI system, making this a critical focus area.
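One practical way to detect tampering with training data is to record a cryptographic digest of every dataset file when the data is approved, then re-check those digests before each training run. The sketch below assumes datasets are stored as files in a local directory; `build_manifest` and `verify_manifest` are illustrative helpers, not anything the Act prescribes. A matching manifest shows the files are unchanged since it was taken; it cannot tell you whether the data was already poisoned before then.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a digest for every file under data_dir, keyed by relative path."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return files added, removed, or modified since the manifest was taken."""
    current = build_manifest(data_dir)
    modified = {n for n in manifest.keys() & current.keys() if manifest[n] != current[n]}
    added_or_removed = manifest.keys() ^ current.keys()
    return sorted(modified | added_or_removed)


# Demo: take a manifest, then tamper with one file and inject another.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train_a.csv").write_text("x,label\n1,0\n")
    (root / "train_b.csv").write_text("x,label\n2,1\n")
    manifest = build_manifest(root)
    before = verify_manifest(root, manifest)              # []
    (root / "train_a.csv").write_text("x,label\n1,1\n")   # flipped label
    (root / "train_c.csv").write_text("x,label\n9,1\n")   # injected rows
    after = verify_manifest(root, manifest)               # ['train_a.csv', 'train_c.csv']
```

The manifest itself should be stored somewhere the training pipeline cannot overwrite; otherwise an attacker who can edit the data can simply regenerate it.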

Supply Chain Security

Modern AI systems rarely exist in isolation; they depend on complex supply chains of software libraries, pre-trained models, cloud services, and third-party components. The EU AI Act recognizes this reality: providers of high-risk AI systems and the third parties that supply tools, services, or components used in them must set out, by written agreement, the information, technical access, and assistance the provider needs to comply. In practice, this means verifying the security credentials of vendors and partners, tracking the provenance of AI models and components, monitoring for vulnerabilities in third-party software, and maintaining transparency about external dependencies.
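Provenance tracking starts with an inventory. The sketch below keeps a hypothetical "AI bill of materials" as a list of `Component` records and flags entries that lack a pinned version, a documented source, or, for downloaded models and datasets, a SHA-256 digest. The component names, field names, and rules are assumptions chosen for illustration; adapt them to whatever your supplier agreements actually require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One entry in a hypothetical AI bill of materials."""
    name: str
    kind: str         # e.g. "library", "pretrained_model", "dataset", "service"
    version: str      # exact version or revision; "" if unknown
    source: str       # where it was obtained; "" if undocumented
    sha256: str = ""  # pinned digest of the downloaded artifact, if any


def provenance_gaps(components: list[Component]) -> dict[str, list[str]]:
    """Map each component name to the provenance fields it is missing."""
    gaps: dict[str, list[str]] = {}
    for c in components:
        missing = [field for field in ("version", "source") if not getattr(c, field)]
        # Downloaded artifacts such as model weights should be pinned by digest.
        if c.kind in {"pretrained_model", "dataset"} and not c.sha256:
            missing.append("sha256")
        if missing:
            gaps[c.name] = missing
    return gaps


bom = [
    Component("example-tokenizer", "library", "1.4.2", "internal-mirror"),
    Component("base-encoder", "pretrained_model", "v2", "internal-registry"),
    Component("vendor-ocr", "service", "", ""),
]
print(provenance_gaps(bom))
# {'base-encoder': ['sha256'], 'vendor-ocr': ['version', 'source']}
```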

Incident Response and Monitoring

The Act requires organizations to establish robust mechanisms for detecting, reporting, and responding to security incidents. Companies must implement continuous monitoring systems, maintain incident response protocols, report serious incidents to authorities, and keep detailed logs for investigation purposes.
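Logs are only useful for investigations if they can be trusted. A common technique is a hash chain: each log record stores the hash of the previous record, so silently editing or deleting an earlier entry breaks verification for everything after it. The `EventLog` class below is a minimal, in-memory sketch of that idea; the event names and fields are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class EventLog:
    """Append-only log in which each record carries the previous record's hash.

    Tampering with an earlier record breaks every later link, which makes it
    detectable; it does not prevent tampering on its own.
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, event: str, **details) -> dict:
        prev_hash = self.records[-1]["hash"] if self.records else self.GENESIS
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.records.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash a canonical JSON form of everything except the hash field itself.
        payload = {k: v for k, v in record.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode()).hexdigest()

    def verify(self) -> bool:
        prev = self.GENESIS
        for record in self.records:
            if record["prev"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True


log = EventLog()
log.append("inference", model="credit-v3", decision="declined")
log.append("anomaly", model="credit-v3", detail="input outside training range")
print(log.verify())  # True
```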

Transparency and Accountability

Security through obscurity is no longer acceptable under the EU AI Act. High-risk AI systems must maintain comprehensive documentation about their security measures, undergo conformity assessment and ongoing monitoring, provide clear information about potential security risks, and maintain accountability chains for security decisions. This transparency helps both regulators and users understand and trust the security of AI systems.

Key Provisions of the EU AI Act

Several specific provisions within the EU AI Act directly address cybersecurity concerns, creating a framework that's both comprehensive and enforceable:

Article 15: Accuracy, Robustness and Cybersecurity

This cornerstone provision explicitly requires high-risk AI systems to be resilient against errors, faults, and inconsistencies during their entire lifecycle. More importantly, it mandates that these systems must be resistant to unauthorized third parties attempting to alter their use, outputs, or performance through exploiting system vulnerabilities. This provision essentially makes cybersecurity a legal requirement, not a best practice.

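The robustness requirement extends beyond traditional IT security to threats specific to AI. Article 15 explicitly calls for measures to prevent, detect, respond to, resolve, and control attacks such as data poisoning (manipulating the training data), model poisoning (tampering with pre-trained components), adversarial examples or model evasion (inputs crafted to make the model err), and confidentiality attacks, as well as model flaws. Companies must be able to show that their AI systems maintain security and performance even under attack.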
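Adversarial examples are easiest to see on a linear classifier, where the worst-case attack can be computed exactly. For a model with score w·x + b, shifting every feature by at most eps in the direction sign(w) (the fast gradient sign method, FGSM) changes the score by eps times the sum of |w_i|, and no perturbation within that budget can change it more. The toy sketch below uses that fact both to craft an attack and to certify robustness. The weights and inputs are made up, and real models are usually nonlinear: for neural networks FGSM is only a heuristic attack, and certification needs more specialised methods.

```python
def score(w: list[float], b: float, x: list[float]) -> float:
    """Decision score of a linear classifier; the predicted class is score > 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def _sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(w: list[float], b: float, x: list[float], eps: float) -> list[float]:
    """Worst-case L-infinity perturbation of size eps for a linear model.

    Each feature moves by eps in the direction that pushes the score toward
    the opposite class; for linear models this FGSM step is exact.
    """
    direction = 1.0 if score(w, b, x) <= 0 else -1.0
    return [xi + direction * eps * _sign(wi) for wi, xi in zip(w, x)]


def is_certifiably_robust(w: list[float], b: float, x: list[float], eps: float) -> bool:
    """True if no perturbation with per-feature change <= eps can flip the prediction."""
    return abs(score(w, b, x)) > eps * sum(abs(wi) for wi in w)


w, b, x = [1.0, -2.0], 0.5, [1.0, 1.0]
print(score(w, b, x))                              # -0.5
print(is_certifiably_robust(w, b, x, 0.1))         # True
print(is_certifiably_robust(w, b, x, 0.2))         # False
print(score(w, b, fgsm_linear(w, b, x, 0.2)) > 0)  # True: the prediction flips
```

The same pattern, attack to find failures and certify where possible, is what a robustness test suite would apply to production models with tooling suited to their architecture.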

Risk Management Requirements

The Act (Article 9) requires providers of high-risk AI systems to establish and maintain a continuous risk management system throughout the AI lifecycle. From a cybersecurity perspective, this means regularly identifying potential security vulnerabilities, assessing the likelihood and severity of security breaches, implementing appropriate mitigation measures, and documenting all risk management activities.
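A risk register is one common way to make this continuous process concrete. The sketch below scores each security risk as likelihood times severity on a 1 to 5 scale and surfaces the highest-scoring items for mitigation. The scale, the threshold of 12, and the example risks are all assumptions for illustration; the Act does not prescribe a scoring formula.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    severity: int    # 1 (negligible) to 5 (critical); hypothetical scale
    mitigation: str = ""

    def __post_init__(self) -> None:
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def triage(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Training data poisoned via public web scrape", likelihood=3, severity=5),
    Risk("Model weights exfiltrated from storage", likelihood=2, severity=4),
    Risk("Adversarial inputs evade fraud detector", likelihood=4, severity=4),
]
for r in triage(register):
    print(r.score, r.description)
# 16 Adversarial inputs evade fraud detector
# 15 Training data poisoned via public web scrape
```

Re-running the triage whenever the system, its data, or the threat landscape changes is what turns the register into the "living" process described above rather than a one-off spreadsheet.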

This provision creates a living, breathing approach to AI security rather than a one-time compliance exercise.

Technical Documentation Obligations

Providers must maintain detailed technical documentation that demonstrates compliance with the Act's requirements. For cybersecurity, this includes documenting the system's security architecture and design choices, describing security testing methodologies and results, maintaining records of identified vulnerabilities and remediation efforts, and tracking changes to the system that might affect security.
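Keeping documentation current is easier when gaps can be checked automatically. The sketch below models a documentation record as a dictionary whose section names are hypothetical and simply mirror the four cybersecurity items listed above; the Act's full list of required contents is set out in its Annex IV. The illustrative `record_change` helper appends to the change history and marks security-relevant changes for re-review.

```python
# Hypothetical section names mirroring the documentation items described above.
REQUIRED_SECURITY_SECTIONS = (
    "security_architecture",
    "security_testing",
    "vulnerabilities_and_remediation",
    "change_history",
)


def missing_sections(doc: dict[str, str]) -> list[str]:
    """Return required sections that are absent or empty in a documentation record."""
    return [s for s in REQUIRED_SECURITY_SECTIONS if not doc.get(s, "").strip()]


def record_change(doc: dict[str, str], version: str, summary: str, security_relevant: bool) -> None:
    """Append a change entry; security-relevant changes are flagged for re-review."""
    flag = " [SECURITY REVIEW]" if security_relevant else ""
    entry = f"{version}: {summary}{flag}"
    doc["change_history"] = (doc.get("change_history", "") + "\n" + entry).strip()


doc = {
    "security_architecture": "Inference API behind mTLS gateway; weights encrypted at rest.",
    "security_testing": "See penetration test report PT-01.",
}
print(missing_sections(doc))  # ['vulnerabilities_and_remediation', 'change_history']
record_change(doc, "1.1.0", "Swapped base model", security_relevant=True)
print(missing_sections(doc))  # ['vulnerabilities_and_remediation']
```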

This documentation must be kept up-to-date throughout the system's lifecycle and made available to authorities upon request.

Conformity Assessments

Before placing high-risk AI systems on the market, providers must complete a conformity assessment to verify compliance with the Act's requirements. These assessments specifically evaluate cybersecurity measures, forcing companies to prove their AI systems meet security standards before deployment. For certain high-risk applications, third-party assessments by notified bodies are required, adding an independent verification layer.

Post-Market Monitoring

The Act recognizes that security doesn't end at deployment. Providers must establish post-market monitoring systems that track the performance and security of AI systems in real-world use, collect and analyze data about potential security incidents, implement corrective actions when vulnerabilities are discovered, and report serious incidents to market surveillance authorities.

Penalties for Non-Compliance

To ensure these provisions have teeth, the EU AI Act sets tiered penalties. The highest tier, up to €35 million or 7% of global annual turnover (whichever is higher), applies to prohibited AI practices. Failing to meet the obligations for high-risk systems, including the cybersecurity requirements of Article 15, can bring fines of up to €15 million or 3% of global annual turnover, and supplying incorrect or misleading information to authorities up to €7.5 million or 1%. For SMEs and start-ups, the lower of the two amounts applies. Even these lower tiers create strong financial incentives for robust cybersecurity practices.
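The arithmetic behind these caps is worth spelling out, because the "whichever is higher" rule means the percentage dominates for large companies, while for SMEs and start-ups the lower figure applies. This sketch encodes the Act's three main fine tiers as (fixed cap, percentage of global annual turnover) pairs; `max_fine` and the tier labels are illustrative names, and the function only computes the statutory ceiling, since actual fines are set case by case.

```python
# (fixed cap in EUR, percent of total worldwide annual turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),      # includes high-risk requirements such as Article 15
    "incorrect_information": (7_500_000, 1),  # misleading information supplied to authorities
}


def max_fine(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of a fine in EUR; regulators set the actual amount below this cap."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent // 100
    # Large undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine("prohibited_practice", 2_000_000_000))          # 140000000
print(max_fine("other_obligation", 200_000_000))               # 15000000
print(max_fine("other_obligation", 20_000_000, is_sme=True))   # 600000
```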

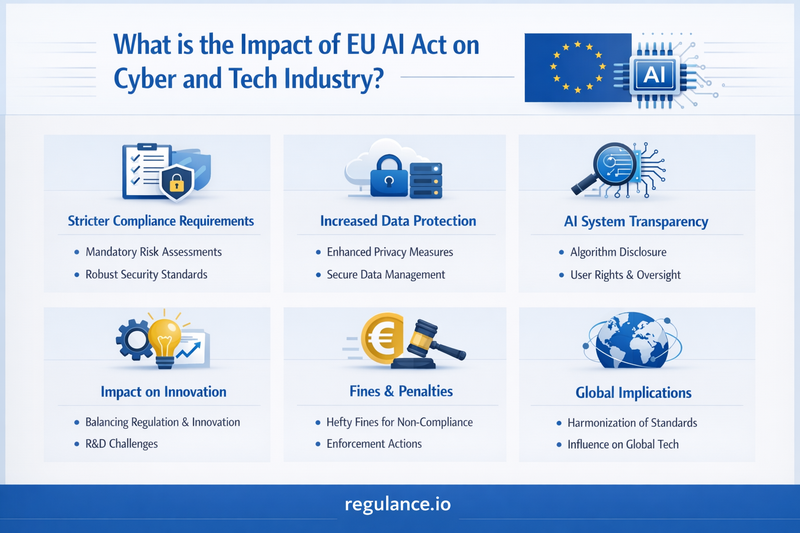

What is the Impact of the EU AI Act on the Cyber and Tech Industry?

The EU AI Act is fundamentally reshaping how the cybersecurity and technology industries operate, creating both challenges and opportunities:

Increased Investment in AI Security

Many companies are increasing their cybersecurity budgets specifically for AI systems. This includes hiring specialized AI security professionals, investing in security testing tools and platforms, conducting regular third-party security audits, and implementing advanced monitoring and detection systems. Demand for AI security solutions is growing as companies work to meet compliance requirements.

Shift Toward Security-First Development

The Act is accelerating the adoption of "secure by design" principles across the tech industry. Development teams are integrating security considerations from the earliest stages of AI development, conducting threat modeling during the design phase, implementing security testing throughout the development cycle, and prioritizing security features even when they add complexity or cost.

This represents a cultural shift from the traditional "move fast and break things" mentality to a more measured, security-conscious approach.

New Business Opportunities

While compliance creates costs, it's also spawning new business opportunities. We're seeing the emergence of specialized AI security consulting firms, development of compliance management platforms specifically for the EU AI Act, growth in third-party assessment and certification services, and creation of security solutions tailored to AI systems. Forward-thinking companies are turning compliance requirements into competitive advantages.

Supply Chain Transformation

The Act's supply chain security requirements are forcing organizations to fundamentally rethink their vendor relationships and procurement processes. Companies are conducting more thorough security assessments of suppliers, implementing stricter contractual security requirements, developing supply chain risk management programs, and sometimes choosing to build capabilities in-house rather than rely on third parties with uncertain security postures.

Innovation Impact

There's ongoing debate about whether the EU AI Act will stifle innovation or channel it in more responsible directions. On one hand, compliance costs and regulatory uncertainty can slow development, particularly for startups and smaller companies. On the other, the Act is spurring innovation in security technologies, creating demand for solutions that enable both compliance and innovation, and potentially giving compliant EU companies competitive advantages in security-conscious markets.

Global Standardization

Much like GDPR became a de facto global privacy standard, the EU AI Act is influencing AI regulation worldwide. Companies developing AI systems often choose to meet EU standards globally rather than maintaining different versions for different markets. This "Brussels Effect" may effectively globalize European cybersecurity standards for AI.

Competitive Dynamics

The Act is reshaping competitive dynamics within the tech industry. Large companies with substantial resources may find it easier to absorb compliance costs, potentially creating barriers to entry for smaller competitors. However, companies that excel at compliance and security may differentiate themselves in the market, and new players offering compliance-enabling solutions have opportunities to challenge incumbents.

Impacts of the EU AI Act on Individuals

While much of the discussion around the EU AI Act focuses on its impact on companies, the real beneficiaries should be individuals. Here's how the Act's cybersecurity provisions are designed to protect ordinary people:

Enhanced Protection Against AI-Related Cyber Threats

The Act's security requirements mean that the AI systems you interact with, whether you're applying for a loan, using healthcare diagnostics, or engaging with customer service, should be more resistant to cyberattacks that could compromise your personal information or lead to harmful decisions. Adversarial attacks that could manipulate AI decisions about you become harder to execute, and data poisoning attempts that could bias AI systems against certain groups face more barriers.

Greater Transparency

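The Act gives individuals clearer information about the AI systems that affect their lives. You must be told when you are interacting directly with an AI system such as a chatbot, unless this is obvious from the context, and deepfakes must be disclosed as AI-generated. Deployers of high-risk systems that make or assist decisions about you must inform you that such a system is being used, and if a decision based on a high-risk system's output significantly affects you, you can request an explanation of the system's role in it.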

This transparency empowers individuals to make informed decisions about their interactions with AI.

Accountability When Things Go Wrong

If an AI system makes a wrong decision due to a security breach or vulnerability, the Act establishes clear accountability chains. Providers must report serious incidents, individuals can lodge complaints with market surveillance authorities, and those authorities have powers to investigate and impose penalties.

Reduced Risk of Discrimination

Security vulnerabilities in AI systems can be exploited to introduce bias or discrimination. By requiring robust security measures, the EU AI Act helps protect against attacks designed to manipulate AI systems into discriminatory behavior. Data integrity protections reduce the risk of training data manipulation that could embed biases, and monitoring requirements help detect when AI systems are behaving unfairly.

Trust in AI Technologies

Perhaps most importantly, the Act's comprehensive approach to AI security is designed to build public trust in AI technologies. When people trust that AI systems are secure and reliable, they're more likely to benefit from AI innovations in healthcare, education, finance, and other domains. The Act aims to create an environment where AI development can flourish while maintaining public confidence.

Potential Downsides

It's worth noting that individuals might experience some trade-offs. Compliance costs could lead to higher prices for AI-powered services, particularly in the short term. Some innovative AI applications might be delayed or never reach the market due to compliance challenges. And the documentation and assessment requirements might slow the deployment of beneficial AI applications.

However, many consumer advocates argue that these trade-offs are worthwhile if they result in safer, more secure AI systems that respect individual rights.

FAQs

When does the EU AI Act take effect?

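The EU AI Act entered into force on 1 August 2024, but its obligations apply in phases. Prohibitions on unacceptable-risk practices applied from 2 February 2025, six months after entry into force, and obligations for general-purpose AI models from 2 August 2025. Most requirements for high-risk AI systems apply from 2 August 2026 (24 months after entry into force), and those for high-risk AI embedded in products already covered by EU product-safety legislation from 2 August 2027. Companies should begin compliance preparations now rather than waiting for deadlines.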

Does the EU AI Act apply to companies outside Europe?

Yes. If you place AI systems on the EU market or if your AI system's outputs are used in the EU, the Act applies to you regardless of where your company is based. This extraterritorial scope is similar to that of the GDPR.

What happens if an AI system has a security breach?

Providers must report serious incidents to market surveillance authorities, investigate the root cause and implement fixes, notify affected users when appropriate, and potentially face regulatory investigations and penalties. The specific requirements depend on the severity and nature of the breach.
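For serious incidents, the Act sets outer time limits for reporting, counted from when the provider becomes aware of the incident: no later than 15 days in general, 10 days where a person has died, and 2 days for a widespread infringement or a serious and irreversible disruption of critical infrastructure. Reports are expected immediately once a causal link is established, so these are ceilings, not targets. The sketch below computes the latest permissible date; the category labels and `report_due_by` are hypothetical names.

```python
from datetime import date, timedelta

# Outer limits in days after the provider becomes aware of a serious incident.
# The Act expects reporting immediately; these are the latest permissible dates.
REPORTING_LIMIT_DAYS = {
    "default": 15,
    "death": 10,
    "widespread_or_critical_infrastructure": 2,
}


def report_due_by(aware_on: date, incident_type: str = "default") -> date:
    """Latest date by which the serious-incident report must reach the authority."""
    return aware_on + timedelta(days=REPORTING_LIMIT_DAYS[incident_type])


aware = date(2026, 9, 1)
print(report_due_by(aware))                                           # 2026-09-16
print(report_due_by(aware, "death"))                                  # 2026-09-11
print(report_due_by(aware, "widespread_or_critical_infrastructure"))  # 2026-09-03
```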

How does the EU AI Act differ from GDPR?

While GDPR focuses on personal data protection, the EU AI Act specifically regulates AI systems. The two complement each other: AI systems that process personal data must comply with both regulations. The EU AI Act also imposes cybersecurity requirements that go beyond data protection.

Are all AI systems subject to the same requirements?

No, the Act uses a risk-based approach. Only high-risk AI systems face the strictest cybersecurity and other requirements. Minimal and limited-risk AI systems have lighter obligations. The risk classification depends on the AI system's intended purpose and potential impact.

What counts as a high-risk AI system?

High-risk systems include AI used in critical infrastructure, educational settings, employment decisions, access to essential services, law enforcement, migration management, and administration of justice. The Act lists these use cases in Annex III; AI used as a safety component of products already covered by EU product-safety legislation can also be classified as high-risk.

Can small companies comply with the EU AI Act?

The Act includes some provisions to support SMEs, including access to regulatory sandboxes, reduced conformity assessment fees, and support from national authorities. However, small companies developing high-risk AI systems still face significant compliance obligations.

How much will compliance cost?

Costs vary widely depending on the AI system's risk level, complexity, and current security posture. Published estimates for high-risk systems range from tens of thousands to millions of euros, and ongoing compliance costs must also be budgeted for.

What role do cybersecurity standards play?

The Act references harmonized European standards that, when followed, create a presumption of compliance. Following recognized cybersecurity standards for AI systems can simplify the compliance process.

What should companies do to prepare?

Start by assessing which of your AI systems fall under the Act's scope and risk categories, conducting gap analyses between current practices and Act requirements, developing or enhancing AI security programs, establishing governance structures for AI compliance, and training teams on the Act's requirements and implications.

Conclusion

The EU AI Act represents a watershed moment in the evolution of artificial intelligence governance, placing cybersecurity at the heart of responsible AI development and deployment. By mandating security by design, establishing clear accountability frameworks, and creating enforceable standards, the Act is pushing the entire AI ecosystem toward more secure and trustworthy systems.

For the cybersecurity and tech industries, this legislation creates both challenges and opportunities. Compliance requires significant investment in security capabilities, processes, and documentation. But companies that rise to meet these challenges will differentiate themselves in an increasingly security-conscious market, build stronger customer trust, and help shape the future of responsible AI.

For individuals, the Act promises enhanced protection against AI-related security threats, greater transparency about the systems that affect their lives, and stronger accountability when things go wrong. While the full impact will only become clear over time, the direction is unmistakably toward putting people's safety and security first.

The EU AI Act is fundamentally reshaping the relationship between artificial intelligence, cybersecurity, and society. As implementation unfolds over the coming years, organizations that treat compliance as an opportunity rather than a burden will be best positioned to thrive in the emerging AI landscape.

Ready to Navigate EU AI Act Compliance? Contact Regulance today for a comprehensive AI compliance assessment and discover how we can help you build secure, compliant, and trustworthy AI systems that meet the EU AI Act's standards while driving your business forward.