Is Shadow AI Undermining Your Compliance Efforts? Here’s How to Fix It

Introduction

Your employees are using artificial intelligence tools right now, and chances are you have no idea which ones or what data they're feeding into them. This invisible technology adoption, known as shadow AI, has become one of the fastest-growing security and compliance challenges facing businesses today.

Shadow AI occurs when employees adopt artificial intelligence tools without approval from IT departments, security teams, or organizational leadership. Unlike traditional software that required downloads and installations, modern AI tools are accessible through web browsers in seconds. A marketing manager can start using an AI writing assistant, a developer can tap into an AI code generator, and an HR professional can employ an AI resume screener, all without a single approval request or security review.

The numbers paint a concerning picture. Recent research indicates that up to 80% of employees are using AI tools without their employer's knowledge. These workers typically have good intentions, seeking productivity gains and competitive advantages, but they're unknowingly creating serious vulnerabilities. Every unauthorized AI interaction potentially exposes sensitive customer data, proprietary information, and intellectual property to third-party systems operating outside your organization's control.

The challenge isn't just about unauthorized technology use. Shadow AI fundamentally undermines compliance frameworks, creates invisible data flows that auditors can't trace, and introduces security gaps that traditional monitoring systems weren't designed to detect. For organizations operating under regulations like GDPR, HIPAA, or financial industry standards, shadow AI represents a compliance crisis hiding in plain sight.

This article explores what shadow AI really means for your organization and, more importantly, how to address it without crushing the innovation that makes AI valuable in the first place.

What is Shadow AI?

Shadow AI refers to artificial intelligence tools, applications, and systems that employees use without formal approval, oversight, or knowledge from their organization's IT department, security teams, or leadership. It's the AI equivalent of shadow IT, a term that's been haunting IT departments for years, but with significantly higher stakes.

These unauthorized AI tools can range from seemingly innocent applications to sophisticated systems capable of processing sensitive information. Common examples include:

- Generative AI chatbots like ChatGPT or Claude used for content creation, coding assistance, or problem-solving

- AI-powered writing assistants that help draft emails, reports, or marketing materials

- Image generation tools that create graphics, logos, or marketing visuals

- AI code completion tools that suggest or write code snippets

- Voice transcription services that convert meetings into text

- AI-enhanced productivity apps with built-in intelligent features

- Customer service bots implemented without security review

- Data analysis tools that use machine learning algorithms

The defining characteristic of shadow AI isn't necessarily that these tools are inherently dangerous or malicious. Rather, it's that they operate outside established governance frameworks, making it impossible for organizations to assess risks, ensure compliance, or maintain data security standards.

What makes shadow AI particularly challenging is its accessibility. Unlike traditional enterprise software that requires installation, procurement, and often significant investment, many AI tools are available for free or at low cost through web browsers. An employee can start using a powerful AI system in seconds without downloading anything or requesting budget approval, and that's precisely the problem.

Why Do Organizations Face Shadow AI?

Understanding why shadow AI proliferates is essential to addressing it effectively. Employees don't typically adopt unauthorized tools out of malice; they're usually trying to work smarter and faster. Several factors drive this behavior:

The AI productivity gap is real and compelling. Employees who discover AI tools often experience dramatic improvements in their work efficiency. A task that took hours might now take minutes. When you've experienced that kind of productivity boost, returning to old methods feels like switching from a car back to walking. This creates powerful incentives to continue using these tools regardless of approval status.

Formal procurement processes move too slowly for the pace of AI innovation. Traditional IT approval processes were designed for a different era. By the time a tool goes through security review, budget approval, and vendor assessment, newer and better alternatives have emerged. Employees facing pressing deadlines can't wait months for approval, so they find workarounds.

There's a knowledge gap about AI risks. Many employees genuinely don't understand the security implications of feeding company data into AI systems. They see these tools as similar to search engines or calculators: utilities rather than potential data leakage points. Without adequate training, they remain unaware that they're creating compliance vulnerabilities.

Organizations lack clear AI usage policies. Many companies haven't established comprehensive guidelines around AI tool adoption. In the absence of clear rules, employees make their own decisions about what's acceptable. If no one has explicitly said "don't use unauthorized AI tools," many employees assume it's permitted.

Competitive pressure creates urgency. When employees see competitors or peers at other companies using AI to work faster and produce better results, they feel pressure to keep pace. The fear of falling behind can override caution about policy compliance.

AI tools are designed for frictionless adoption. Unlike traditional enterprise software, modern AI tools prioritize user experience and immediate value delivery. They're specifically engineered to be irresistibly easy to use, with minimal barriers to entry. This design philosophy, while great for adoption, creates perfect conditions for shadow AI.

Remote work has reduced visibility. With distributed teams, IT departments have less insight into what tools employees are using. The laptop in someone's home office is a much less controllable environment than an office workstation, making unauthorized tool usage easier to hide.

What Are the Risks of Shadow AI?

The risks associated with shadow AI extend far beyond simple policy violations. These unauthorized tools can create serious vulnerabilities across multiple dimensions:

Data privacy breaches represent the most immediate threat. When employees input sensitive information (customer data, proprietary research, financial information, or trade secrets) into unauthorized AI systems, they're essentially handing that data to third parties. Many consumer AI tools retain inputs and, depending on their terms and settings, may use them to train and improve their models, meaning your confidential information could later appear in responses to other users. This isn't theoretical; there have been documented cases of sensitive company information appearing in AI-generated responses after employees inadvertently fed it into public systems.

Intellectual property leakage can undermine competitive advantage. Engineers who use AI coding assistants might paste proprietary algorithms into prompts. Researchers might feed unpublished findings into AI tools for analysis. Marketing teams might share unreleased campaign strategies. Once this information enters an AI system, you've potentially lost control of it permanently. Competitors could theoretically access the same insights, and you may have no way to retrieve or delete the data.

Compliance violations can result in severe penalties. Organizations operating under regulations like GDPR, HIPAA, CCPA, or financial industry standards have strict requirements about data handling, storage, and processing. Shadow AI tools have, by definition, never been vetted against these requirements, so there is no evidence that they meet them, and their use could constitute regulatory violations carrying substantial fines and legal consequences.

Security vulnerabilities multiply without proper vetting. Unauthorized AI tools haven't undergone security assessments. They might have unpatched vulnerabilities, weak authentication mechanisms, or inadequate encryption. Each shadow AI tool represents another potential entry point for cyberattacks or data breaches.

Bias and accuracy issues can harm your brand and operations. AI systems can perpetuate biases or generate inaccurate information. When employees rely on unvetted AI tools for important decisions such as hiring, customer communications, or strategic planning, these biases and errors can lead to discriminatory practices, damaged customer relationships, or flawed business decisions.

Vendor lock-in and integration nightmares emerge over time. When multiple teams adopt different shadow AI tools, you create a fragmented technology landscape. Eventually, you'll need to rationalize these tools, migrate data, or integrate systems, and each of these processes becomes far more difficult and expensive when dealing with unauthorized implementations.

Audit trails disappear, making incident response nearly impossible. If something goes wrong (a data breach, a compliance violation, a customer complaint), investigating the root cause requires understanding what tools were used and how. Shadow AI creates blind spots that make effective incident response and forensic analysis extremely difficult.

How Does Shadow AI Complicate Compliance?

Compliance frameworks weren't designed with shadow AI in mind, yet these unauthorized tools create complications across virtually every regulatory requirement:

Data governance becomes impossible without complete visibility. Regulations like GDPR require organizations to know where personal data is stored, how it's processed, who has access, and how long it's retained. Shadow AI makes answering these fundamental questions impossible. You can't protect data you don't know has been shared, and you can't comply with data subject access requests if you don't know which systems contain personal information.

Third-party risk management frameworks break down. Most compliance standards require organizations to assess and monitor third-party vendors who process sensitive data. Shadow AI tools are, by definition, unassessed third parties. This creates gaps in your third-party risk management program and potential audit findings.

Data residency requirements become unenforceable. Many regulations require that certain types of data remain within specific geographic boundaries. When employees use shadow AI tools, data might be processed on servers in unknown locations, potentially violating data residency requirements without anyone's knowledge.

Consent and purpose limitation principles get violated. GDPR and similar regulations require that personal data be collected for specific, legitimate purposes and not used in ways individuals haven't consented to. When employees feed customer data into AI tools for analysis or content generation, they're likely using that data for purposes beyond what customers agreed to.

Data minimization standards become meaningless. Compliance frameworks emphasize collecting and retaining only necessary data. Shadow AI encourages the opposite behavior—employees might paste entire datasets into AI tools when only a subset is actually needed, violating data minimization principles.
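As a rough illustration of what data minimization can look like in practice, the sketch below trims a record to an allowlist of fields and masks email addresses before any text leaves the organization. The field names, the `ALLOWED_FIELDS` set, and the `minimize_record` helper are hypothetical, and the single regular expression is only a stand-in for a vetted DLP or anonymization product.

```python
import re

# Hypothetical allowlist: the only fields this use case actually needs.
ALLOWED_FIELDS = {"ticket_id", "product", "issue_summary"}

# Simple email pattern for illustration; it will not catch every format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def minimize_record(record: dict) -> dict:
    """Keep only allowlisted fields and mask emails in string values."""
    minimized = {}
    for key, value in record.items():
        if key not in ALLOWED_FIELDS:
            continue  # drop everything the task does not need
        if isinstance(value, str):
            value = EMAIL_RE.sub("[EMAIL]", value)
        minimized[key] = value
    return minimized


# Made-up example record; only three of its five fields survive.
record = {
    "ticket_id": 1042,
    "customer_name": "Jane Doe",
    "customer_email": "jane@example.com",
    "product": "Widget Pro",
    "issue_summary": "Login fails; reply to jane@example.com",
}
print(minimize_record(record))
```

An allowlist is used rather than a blocklist so that newly added fields are excluded by default until someone decides the task needs them.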

Audit evidence becomes incomplete and unreliable. Compliance audits require comprehensive documentation of data flows, access controls, and processing activities. Shadow AI creates undocumented data flows and processing that won't appear in audit logs, making it impossible to provide complete evidence of compliance.

Right-to-erasure requests become unmanageable. Under GDPR and similar regulations, individuals can request deletion of their personal data. If that data has been fed into shadow AI systems, you may have no way to honor these requests, creating direct regulatory violations.

Security incident notification timelines become impossible to meet. Many regulations require notifying authorities and affected individuals within specific timeframes after discovering a data breach. If you don't know about shadow AI tools, you can't detect breaches involving them, making timely notification impossible.

The fundamental problem is that compliance requires control, visibility, and documentation, precisely what shadow AI eliminates. Every unauthorized AI tool represents a potential compliance gap, and the cumulative effect can transform a generally compliant organization into one riddled with violations.

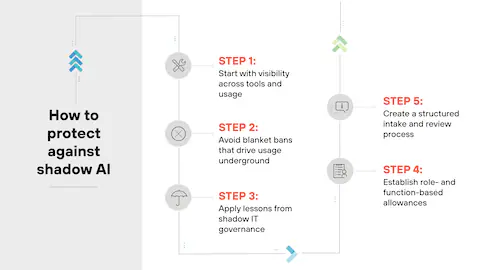

6 Strategies for Combating Shadow AI

Addressing shadow AI requires a balanced approach that acknowledges legitimate employee needs while protecting organizational interests. Here are six comprehensive strategies:

1. Establish Clear, Practical AI Governance Policies

Create explicit policies that define acceptable AI tool usage without being so restrictive that employees feel compelled to work around them. Your policy should address which types of AI tools are permitted, what data can be input into AI systems, approval processes for new tools, and consequences for violations. Importantly, make these policies accessible and understandable; complex legal documents that nobody reads won't change behavior. Consider creating simple decision trees or flowcharts that help employees quickly determine whether a specific AI use case is acceptable.
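A decision tree of this kind can be small enough to fit on one screen. The sketch below is one possible shape, not a recommended policy: the three questions, the `UseCase` fields, and the wording of each outcome are all assumptions that an organization would replace with its own rules.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    tool_approved: bool           # tool is on the organization's approved list
    contains_personal_data: bool  # customer or employee personal data
    contains_confidential: bool   # trade secrets, source code, financials


def decide(use_case: UseCase) -> str:
    """Walk a small decision tree and return a plain-language outcome."""
    if not use_case.tool_approved:
        return "Stop: request a review of the tool before using it."
    if use_case.contains_personal_data:
        return "Stop: personal data needs sign-off from the privacy team."
    if use_case.contains_confidential:
        return "Caution: allowed only in the enterprise-licensed workspace."
    return "Go: approved tool with non-sensitive data."


# Example: an approved tool, no personal data, but confidential material.
print(decide(UseCase(tool_approved=True,
                     contains_personal_data=False,
                     contains_confidential=True)))
```

The questions are ordered so that the most restrictive answer wins first, which keeps the outcome predictable when several conditions apply at once.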

2. Provide Approved AI Tool Alternatives

The most effective way to combat shadow AI is to eliminate the need for it. Evaluate popular AI tools and provide approved, enterprise-grade alternatives that meet security and compliance standards while delivering the productivity benefits employees seek. If employees are using ChatGPT for writing assistance, implement an enterprise AI writing tool with proper data protections. If they're using image generators, provide approved alternatives. When employees have sanctioned tools that actually meet their needs, they're far less likely to seek unauthorized alternatives.

3. Implement Comprehensive AI Literacy Training

Many shadow AI problems stem from ignorance rather than malice. Develop training programs that help employees understand AI capabilities, limitations, and risks. Explain why inputting certain data into AI tools is problematic, illustrate real-world consequences of data leakage, and teach people how to use AI responsibly. Make this training engaging and practical rather than fear-based and theoretical. Include specific examples relevant to different roles; what a marketing person needs to know differs from what an engineer needs to understand.

4. Deploy Technical Detection and Prevention Controls

Use technology to identify and, where appropriate, block unauthorized AI tool usage. Network monitoring can detect connections to known AI services, data loss prevention (DLP) tools can flag when sensitive information is being transmitted to unauthorized destinations, and browser extensions or endpoint detection systems can provide visibility into which web-based AI tools employees are accessing. However, be thoughtful about how you implement these controls: overly aggressive blocking can drive behavior further underground or create workarounds that are even less secure.
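To make the network-monitoring idea concrete, here is a minimal sketch that scans proxy log lines for connections to AI services that are not on an approved list. It assumes a simplified log format of `timestamp user domain`; the watchlist is illustrative and incomplete, and the approved list is hypothetical. Real proxy, CASB, or DLP products expose their own formats and rule engines.

```python
from collections import Counter

# Illustrative, incomplete watchlist; maintain your own from current sources.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}

# Hypothetical approved list for this organization.
APPROVED_DOMAINS = {"claude.ai"}


def find_shadow_ai(log_lines):
    """Count requests per (user, domain) to AI services that are not approved.

    Assumes each line is 'timestamp user domain', separated by whitespace.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _, user, domain = parts
        domain = domain.lower()
        if domain in AI_DOMAINS and domain not in APPROVED_DOMAINS:
            hits[(user, domain)] += 1
    return hits


# Made-up sample data in the assumed format.
sample_log = [
    "2024-05-01T09:00:00Z alice chatgpt.com",
    "2024-05-01T09:05:00Z alice chatgpt.com",
    "2024-05-01T09:07:00Z bob claude.ai",
    "2024-05-01T09:09:00Z carol intranet.example.com",
]
for (user, domain), count in find_shadow_ai(sample_log).items():
    print(f"{user} -> {domain}: {count} request(s)")
```

The sketch only reports usage and blocks nothing, which fits a visibility-first rollout: the counts can inform which approved alternatives to offer before any enforcement is switched on.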

5. Create Fast-Track Approval Processes for AI Tools

Recognize that traditional procurement timelines don't match the pace of AI innovation. Establish expedited evaluation processes for AI tools that employees request. Create a cross-functional AI review team with representatives from IT, security, legal, and compliance who can assess tools quickly. Develop risk-based evaluation frameworks; low-risk tools might get approved in days, while high-risk ones undergo more thorough review. Publicize these processes and actual approval times to demonstrate that following proper channels doesn't mean endless delays.
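One way to picture a risk-based evaluation framework is as a short questionnaire that maps answers to a review tier. The sketch below is only an illustration: the three questions, the point values, and the thresholds are hypothetical and would need to be set by your own review team.

```python
def risk_tier(handles_personal_data: bool,
              vendor_trains_on_inputs: bool,
              has_enterprise_contract: bool) -> str:
    """Map three yes/no answers to a review tier (hypothetical thresholds)."""
    score = 0
    score += 2 if handles_personal_data else 0
    score += 2 if vendor_trains_on_inputs else 0
    score += 0 if has_enterprise_contract else 1
    if score >= 4:
        return "high: full security, legal, and compliance review"
    if score >= 2:
        return "medium: standard review by the AI review team"
    return "low: fast-track approval"


# Example: a tool that touches personal data under an enterprise contract.
print(risk_tier(handles_personal_data=True,
                vendor_trains_on_inputs=False,
                has_enterprise_contract=True))
```

Keeping the scoring rules this explicit makes it easier to publish them alongside actual approval times, so employees can anticipate which track a request will follow.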

6. Foster a Culture of Transparency and Collaboration

Create an environment where employees feel comfortable disclosing AI tool usage rather than hiding it. Establish channels for employees to report tools they've discovered or want to use without fear of punishment. Consider implementing an "amnesty period" where employees can come forward about shadow AI tools they've been using without facing consequences. Involve employees in AI governance decisions; their input about which tools actually help them work better is valuable. When people feel heard and see that the organization is genuinely trying to enable productive AI use safely, they're more likely to work within approved frameworks.

These strategies work best in combination. Technology alone won't solve the problem, nor will policies or training in isolation. The most successful approaches integrate all these elements into a coherent AI governance program that balances innovation with risk management.

FAQs

Is all unauthorized AI usage considered shadow AI?

Yes, shadow AI specifically refers to any AI tool or system used without proper authorization and oversight from IT, security, or leadership. Even if the tool itself is legitimate and widely used, if it's being used without following your organization's approval processes, it constitutes shadow AI.

Can shadow AI ever be beneficial to organizations?

While shadow AI creates significant risks, it sometimes reveals genuine gaps in your approved tool portfolio. Employees adopting unauthorized AI tools are essentially conducting user research, identifying productivity bottlenecks and finding solutions. Smart organizations monitor shadow AI trends to inform their technology strategy, then provide approved alternatives that meet those demonstrated needs.

How can small businesses with limited resources address shadow AI?

Small organizations can start with fundamentals: establish clear policies about AI usage, conduct basic training about data sensitivity, and implement at least one or two approved AI tools for common use cases. Focus on creating a culture where employees ask before adopting new tools. You don't need enterprise-grade monitoring systems; even a simple policy of "check with IT first" can dramatically reduce shadow AI risks if consistently reinforced.

What should I do if I discover employees using shadow AI tools?

Approach the situation as a learning opportunity rather than immediately disciplining employees. Understand why they adopted the tool: what problem were they trying to solve? Assess what data they've shared with the system. Determine if approved alternatives exist or if you need to evaluate the tool for official adoption. Use the discovery to improve your AI governance program rather than simply punishing the behavior.

Do AI detection tools work for identifying shadow AI?

AI detection tools primarily identify AI-generated content, not AI tool usage. To detect shadow AI, you need network monitoring, endpoint detection, and data loss prevention systems that track which external services employees are connecting to and what information is being transmitted. Cloud access security brokers (CASBs) can be particularly effective for identifying unauthorized cloud-based AI services.

How often should AI usage policies be updated?

Given the rapid pace of AI development, review your AI governance policies at least quarterly. Major AI announcements, new regulatory guidance, or changes in your organization's risk appetite should trigger immediate policy reviews. Maintain a living document approach where minor updates happen continuously rather than waiting for annual comprehensive overhauls.

Conclusion

Shadow AI represents one of the most significant governance challenges facing modern organizations. Unlike traditional shadow IT, these unauthorized AI tools process and learn from your data in ways that create unprecedented risks to privacy, security, intellectual property, and compliance. The stakes are simply too high to ignore.

Yet the solution isn't to declare war on AI or implement draconian controls that stifle innovation and frustrate employees. The organizations that successfully navigate shadow AI are those that recognize legitimate employee needs, provide approved alternatives, establish clear but practical policies, and foster cultures where transparency is rewarded rather than punished.

The reality is that AI tools are transformative, and employees who've experienced their benefits won't willingly return to less efficient workflows. Your choice isn't between allowing shadow AI and preventing AI usage altogether; it's between managing AI adoption strategically or allowing it to happen chaotically.

By implementing comprehensive AI governance frameworks, providing adequate training, offering approved tools, and maintaining open dialogue with employees, you can capture the productivity benefits of AI while maintaining the control necessary for compliance and security. The key is moving fast enough to meet employee needs while maintaining the guardrails that protect your organization.

Schedule a demo today and discover how Regulance can transform shadow AI from a hidden threat into a managed opportunity. Visit Regulance.io to learn more about protecting your organization in the age of artificial intelligence.