EU AI Act: How Does It Address the Dangers of AI and Does It Work?

Introduction

Artificial intelligence has become the invisible force shaping our daily lives. It decides which job candidates get interviews, determines loan approvals, powers facial recognition systems at borders, and even influences medical diagnoses. While AI promises tremendous benefits, its unchecked use has sparked legitimate fears: algorithmic discrimination denying opportunities based on race or gender, manipulative systems exploiting human vulnerabilities, opaque decision-making affecting lives without explanation, and surveillance technologies threatening privacy and freedom.

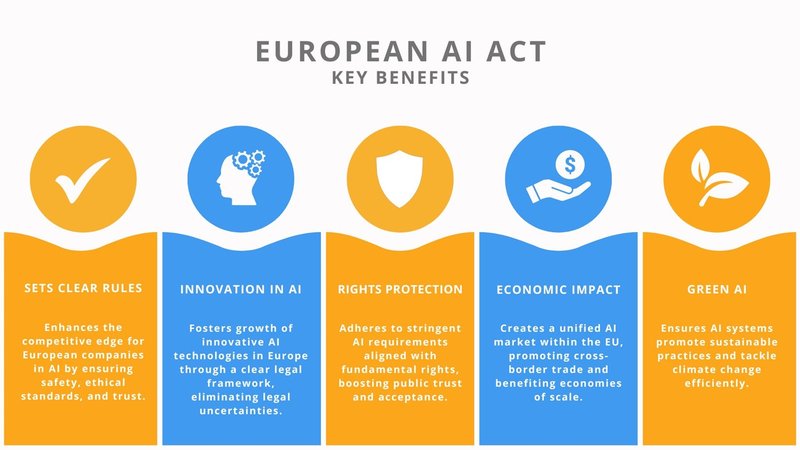

The question isn't whether AI should be regulated; it's how to do it effectively without crushing innovation. The European Union has answered this challenge with the EU AI Act, the world's first comprehensive legal framework specifically designed to govern artificial intelligence. Approved by the European Parliament in March 2024 and in force since August 1, 2024, with obligations phasing in between 2025 and 2027, this landmark legislation establishes clear boundaries for AI development and deployment across all 27 EU member states.

The EU AI Act is a carefully crafted system that distinguishes between AI applications based on their potential to cause harm. Some AI practices are banned outright, while others face stringent oversight. Many AI systems continue operating with minimal restrictions, ensuring innovation thrives where risks are low.

So how exactly does the EU AI Act prevent AI's potential dangers while fostering technological progress? This guide breaks down the mechanisms, requirements, and safeguards that make this regulation a blueprint for responsible AI governance worldwide.

Understanding the EU AI Act: What Makes It Revolutionary?

The EU AI Act takes a risk-based approach to AI regulation, meaning different rules apply depending on how dangerous a particular AI system might be. It establishes a four-tiered risk classification system that determines the level of regulatory scrutiny each AI system receives. These categories are:

Unacceptable Risk (Prohibited AI Systems): AI practices that contradict EU values are completely banned, including systems that manipulate human behavior through subliminal techniques, exploit vulnerabilities based on age or disability, perform social scoring, or use real-time remote biometric identification in public spaces for law enforcement purposes (with limited exceptions).

High-Risk AI Systems: These systems must meet stringent requirements before market deployment, including those used in critical infrastructure, education, employment, essential services, law enforcement, and border management. High-risk AI includes biometric identification systems, credit scoring algorithms, and AI used in medical devices.

Limited-Risk AI Systems: These systems primarily face transparency obligations. Users must be informed when they're interacting with AI, such as with chatbots, and deepfakes must be clearly labeled.

Minimal or No Risk: The vast majority of AI systems fall into this category, including spam filters and AI-enabled video games. These systems have no mandatory obligations but are encouraged to follow voluntary codes of conduct.
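The four tiers can be pictured as a lookup from tier to headline consequence. The sketch below is only an illustration: the `RiskTier` enum, the `OBLIGATIONS` table, and the example system names are hypothetical, and real classification depends on legal analysis of the Act's text, not on a dictionary lookup.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline consequence of each tier, paraphrased from the descriptions above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "stringent requirements before market deployment",
    RiskTier.LIMITED: "transparency obligations",
    RiskTier.MINIMAL: "no mandatory obligations; voluntary codes of conduct",
}

# Hypothetical example systems, tiered as in the article's own examples.
EXAMPLE_SYSTEMS = {
    "social scoring system": RiskTier.UNACCEPTABLE,
    "credit scoring algorithm": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def headline_obligation(system_name: str) -> str:
    """Return the headline obligation for one of the example systems."""
    tier = EXAMPLE_SYSTEMS[system_name]
    return OBLIGATIONS[tier]
```

Calling `headline_obligation("credit scoring algorithm")` returns the high-risk entry. An inventory like this is a starting point for triage, not a substitute for a documented classification decision.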

How the EU AI Act Prevents Manipulation and Protects Human Autonomy

One of the most significant dangers of AI is its potential to manipulate human behavior without people's awareness. The EU AI Act tackles this head-on by prohibiting specific manipulative practices.

Banned Manipulative Techniques

The legislation prohibits AI systems that use subliminal components people cannot perceive to materially distort their behavior in ways that cause harm. Imagine an AI-powered advertisement that uses imperceptible audio frequencies or visual cues to influence purchasing decisions; this is now illegal under the EU AI Act.

The Act also bans systems that exploit vulnerabilities. For instance, an AI toy that encourages dangerous behavior in children or an algorithm that exploits elderly users' cognitive decline to sell unnecessary products would be prohibited.

Social Scoring Prohibition

Perhaps most notably, the EU AI Act bans social scoring: systems that evaluate or classify people based on their social behavior or personal characteristics and lead to detrimental treatment. In its final form the prohibition covers private operators as well as public authorities. This directly addresses concerns raised by social credit systems implemented elsewhere in the world, ensuring that European citizens cannot be systematically discriminated against based on algorithmic assessments of their social behavior.

Safeguarding Fundamental Rights Through High-Risk AI Regulations

For AI systems classified as high-risk, the EU AI Act establishes comprehensive requirements that create multiple layers of protection against potential dangers.

Mandatory Risk Management Systems

High-risk AI providers must implement continuous risk management processes throughout the system's lifecycle. This is an ongoing obligation to identify, assess, mitigate, and monitor risks that could affect health, safety, or fundamental rights.

Data Governance and Quality Requirements

Poor data quality leads to biased outcomes. The EU AI Act requires that training, validation, and testing datasets be relevant, sufficiently representative, and, as far as possible, free of errors and complete. Providers must examine datasets for potential biases and implement measures to detect and correct them. This requirement directly addresses one of AI's most pressing dangers: perpetuating and amplifying societal discrimination through biased training data.

Technical Documentation and Transparency

High-risk AI systems must come with detailed technical documentation that authorities can review. This documentation includes information about the system's intended purpose, risk management measures, data governance practices, and performance metrics. This transparency requirement makes it possible to hold AI developers accountable and enables regulatory oversight.

Human Oversight Mechanisms

The EU AI Act mandates that high-risk AI systems be designed to allow effective human oversight. This means humans must be able to understand the system's capabilities and limitations, monitor its operation, and intervene when necessary. The legislation recognizes that AI should augment rather than replace human judgment in critical decisions.
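One common way to build in this kind of oversight is to let a human reviewer override any AI recommendation and to flag uncertain cases for mandatory review. The sketch below assumes a hypothetical `Decision` record and an arbitrary confidence threshold of 0.9; neither comes from the Act, which does not prescribe a specific mechanism.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    ai_recommendation: str
    confidence: float                     # model's own confidence, 0.0 to 1.0
    human_override: Optional[str] = None  # set by a reviewer who intervenes

    @property
    def final(self) -> str:
        """The human override, when present, always wins."""
        return self.human_override or self.ai_recommendation


def needs_human_review(decision: Decision, threshold: float = 0.9) -> bool:
    """Flag low-confidence recommendations (threshold is an assumption)."""
    return decision.confidence < threshold
```

The design choice worth noting is that the override is part of the record itself, so the final outcome and the fact of human intervention can be audited together.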

Accuracy, Robustness, and Cybersecurity

High-risk systems must achieve appropriate levels of accuracy and be robust against errors and inconsistencies. They must also maintain adequate cybersecurity protection throughout their lifecycle. This requirement prevents both unintentional failures and malicious attacks that could turn AI systems into vectors for harm.

Addressing Bias and Discrimination in AI Systems

Algorithmic discrimination represents one of AI's most insidious dangers: systems that systematically disadvantage certain groups based on protected characteristics like race, gender, age, or disability.

Bias Detection and Mitigation Requirements

The EU AI Act requires providers of high-risk AI systems to examine their training datasets for bias and implement appropriate mitigation measures. This includes ensuring datasets are sufficiently representative of the populations the system will serve.
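A first-pass representativeness check can be as simple as comparing each group's share of the dataset with its share of the population the system will serve. The sketch below is a minimal illustration; the function name, the record layout, and the reference shares are all hypothetical, and a real bias examination would go well beyond head counts.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    Positive values mean the group is over-represented in the dataset,
    negative values mean it is under-represented.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }
```

For example, a training set with seven male and three female applicants, checked against a 50/50 reference population, yields a gap of roughly +0.2 for men and -0.2 for women, which would prompt a closer look before training.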

Prohibition of Biometric Categorization for Sensitive Attributes

The Act specifically prohibits biometric categorization systems that infer sensitive attributes such as race, political opinions, trade union membership, religious beliefs, sex life, or sexual orientation. This prevents AI from being used to classify people based on characteristics that are protected under fundamental rights law.

Performance Monitoring Across Demographics

High-risk AI systems must be designed to enable post-market monitoring of performance across different demographic groups. This ensures that systems don't develop discriminatory patterns after deployment and that problems can be identified and corrected.
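Monitoring of this kind usually starts by breaking a performance metric down by group. The following sketch computes accuracy per group and the largest gap between groups; the function names and the choice of accuracy as the metric are assumptions made for illustration, not requirements taken from the Act.

```python
from collections import defaultdict


def accuracy_by_group(predictions, labels, groups):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label, group in zip(predictions, labels, groups):
        total[group] += 1
        correct[group] += int(pred == label)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Return the difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())
```

Running the same breakdown on fresh production data at regular intervals gives a simple signal for drift: a gap that widens over time is a reason to investigate.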

Ensuring Accountability Through Conformity Assessments

Before high-risk AI systems can be placed on the EU market, they must undergo conformity assessment procedures, which verify compliance with the EU AI Act's requirements.

Third-Party Assessment for Certain Systems

Some high-risk AI systems, particularly those used as safety components in regulated products like medical devices, require assessment by independent third-party bodies called notified bodies. This external verification adds another layer of protection against dangerous AI deployments.

Self-Assessment with Strict Documentation

Other high-risk systems can undergo internal conformity assessment, but with rigorous documentation requirements. Providers must maintain technical documentation proving compliance, and this documentation can be audited by authorities at any time.

CE Marking and Market Surveillance

Providers affix the CE marking to high-risk AI systems that meet the requirements, indicating conformity with EU regulations. After deployment, national market surveillance authorities monitor these systems to ensure continued compliance, creating ongoing accountability mechanisms.

Protecting Against Opaque Decision-Making

AI systems often function as "black boxes," making decisions that significantly impact people's lives without clear explanations. The EU AI Act addresses this transparency deficit in several ways.

Explainability Requirements

High-risk AI systems must be designed to enable users to interpret outputs and use systems appropriately. While the Act doesn't mandate full algorithmic transparency (which can be technically impossible with complex neural networks), it requires sufficient information for humans to understand and trust the system's operation.

Logging Capabilities

High-risk AI systems must automatically log events throughout their lifecycle. These logs enable traceability of the system's functioning, making it possible to investigate problematic outcomes and determine accountability when things go wrong.
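In practice, traceability logs are often written as one structured record per decision. The sketch below uses Python's standard `logging` and `json` modules; the field names (`system_id`, `input_ref`, `output`, `operator`) are hypothetical choices for illustration, since the Act does not dictate a log schema.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("ai_system.audit")


def build_event(system_id, input_ref, output, operator=None):
    """Assemble one traceability record as a plain dictionary."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "input_ref": input_ref,  # a reference to the input, not the raw data
        "output": output,
        "operator": operator,    # person overseeing the decision, if any
    }


def log_event(event):
    """Write the record as one JSON line and return the serialized line."""
    line = json.dumps(event, sort_keys=True)
    logger.info(line)
    return line
```

Storing a reference to the input rather than the input itself is a deliberate choice here: it keeps personal data out of the log while still allowing an investigator to reconstruct what the system saw.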

Instructions for Use

Providers must furnish detailed instructions that deployers (organizations using the AI) can understand. These instructions explain the system's intended purpose, limitations, performance levels, and human oversight requirements, empowering deployers to use AI responsibly.

General-Purpose AI Models: Addressing Systemic Risks

The EU AI Act recognizes that foundation models and general-purpose AI systems like large language models present unique challenges due to their wide-ranging capabilities and applications.

Transparency Obligations for GPAI Providers

Providers of general-purpose AI models must maintain technical documentation, provide information to downstream deployers, implement policies for copyright compliance, and publish summaries of training data. These requirements help manage risks associated with powerful, multipurpose AI systems.

Additional Requirements for High-Impact GPAI

Models with systemic risk, determined by training compute exceeding a specific threshold (the Act presumes systemic risk above 10^25 floating-point operations) or by equivalent capabilities, face additional obligations. Providers must conduct model evaluations, assess and mitigate systemic risks, implement cybersecurity protections, and report serious incidents. This addresses concerns about the most powerful AI models potentially causing widespread harm.
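The compute threshold lends itself to a quick back-of-the-envelope check. The sketch below compares an estimate of training compute against the Act's presumption threshold of 10^25 floating-point operations. The estimate uses the rule of thumb of roughly 6 operations per parameter per training token, which is a common approximation from the machine learning literature and an assumption here, not something the Act specifies; the function names are likewise hypothetical.

```python
# Presumption threshold for systemic risk, in floating-point operations.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough estimate: about 6 FLOPs per parameter per token (assumption)."""
    return 6 * parameters * training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True when training compute is strictly above the threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD
```

Under this approximation, a 70-billion-parameter model trained on 15 trillion tokens lands at about 6.3 x 10^24 operations, below the threshold, while a 400-billion-parameter model on the same data lands at about 3.6 x 10^25, above it. A model below the threshold can still be designated as posing systemic risk on the basis of its capabilities.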

Governance Structure and Enforcement Mechanisms

Regulations are only as effective as their enforcement. The EU AI Act establishes a comprehensive governance framework to ensure compliance.

The European AI Office

A centralized EU AI Office coordinates implementation and supervises general-purpose AI models. This office provides guidance, facilitates cooperation between member states, and ensures consistent application of rules across the EU.

National Competent Authorities

Each EU member state designates authorities responsible for supervising AI systems in their territory. These national bodies conduct market surveillance, investigate complaints, and can impose sanctions for non-compliance.

The AI Board

An advisory body composed of representatives from member states coordinates regulatory approaches and ensures consistent interpretation of the EU AI Act across the EU. This prevents regulatory fragmentation that could undermine the law's effectiveness.

Significant Penalties for Non-Compliance

The EU AI Act includes substantial fines to deter violations. Companies can face penalties of up to €35 million or 7% of global annual turnover, whichever is higher, for deploying prohibited AI systems, and up to €15 million or 3% of turnover for other violations. These penalties provide real teeth to the regulatory framework.
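The fine ceilings are easy to work out for a given company. The sketch below assumes the "whichever is higher" rule that applies to larger undertakings; for SMEs the Act uses the lower of the two amounts, which is not modeled here. The function name is hypothetical, and the result is a ceiling, not a prediction of any actual fine.

```python
def max_fine_eur(global_turnover_eur: int, prohibited_practice: bool) -> float:
    """Return the upper limit of the fine for a non-SME company, in euros."""
    if prohibited_practice:
        fixed_cap, percent = 35_000_000, 7
    else:
        fixed_cap, percent = 15_000_000, 3
    # The ceiling is the higher of the fixed amount and the turnover share.
    return max(fixed_cap, global_turnover_eur * percent / 100)
```

For a company with €1 billion in global annual turnover, the ceiling for deploying a prohibited system is €70 million, because 7% of turnover exceeds the €35 million fixed amount. For a company with €100 million in turnover committing another violation, 3% is only €3 million, so the €15 million fixed amount sets the ceiling.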

Innovation-Friendly Measures: AI Regulatory Sandboxes

The EU AI Act balances safety with innovation through regulatory sandboxes, controlled environments where companies can test innovative AI systems under regulatory supervision before full market deployment.

These sandboxes allow developers to experiment with AI technologies while receiving guidance on compliance, identifying risks early, and refining systems before they reach consumers. This approach prevents the regulation from stifling innovation while still protecting against AI dangers.

Real-World Impact: Case Studies of Protection

To understand how the EU AI Act prevents dangers, consider these practical scenarios:

Employment Screening: An AI-powered resume screening system used for hiring qualifies as high-risk under the Act. The company must ensure its training data doesn't contain biased patterns (such as favoring male candidates for technical roles), implement human oversight so recruiters can challenge AI recommendations, and maintain documentation proving the system's fairness across demographic groups.

Healthcare Diagnostics: An AI system that analyzes medical images to detect cancer must undergo rigorous conformity assessment, maintain detailed performance metrics showing accuracy across different patient populations, and enable radiologists to understand why the system flagged particular areas of concern.

Chatbots: While customer service chatbots are typically limited-risk, they must clearly inform users they're interacting with AI rather than a human. This transparency helps prevent deception and lets users make informed choices about how they engage.

Frequently Asked Questions About the EU AI Act

Q1: When does the EU AI Act take full effect?

The EU AI Act entered into force on August 1, 2024, with a phased implementation timeline. Prohibitions on unacceptable-risk AI became applicable on February 2, 2025. Rules for general-purpose AI models apply from August 2, 2025, while most high-risk AI requirements become fully applicable on August 2, 2026. High-risk systems embedded in regulated products have an extended transition until August 2, 2027.
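For planning purposes, the phased timeline can be kept as a small date table and queried for any given day. This is a minimal sketch using only the dates stated above; the `MILESTONES` name and the label wording are illustrative.

```python
from datetime import date

# Dates as stated in the answer above.
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "prohibitions on unacceptable-risk AI apply"),
    (date(2025, 8, 2), "rules for general-purpose AI models apply"),
    (date(2026, 8, 2), "most high-risk AI requirements apply"),
    (date(2027, 8, 2), "extended transition for high-risk AI in regulated products ends"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones that have started by the given day."""
    return [label for start, label in MILESTONES if start <= on]
```

Calling `milestones_reached(date(2025, 3, 1))` returns the first two entries: the Act is in force and the prohibitions apply, but the general-purpose AI and high-risk rules do not yet.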

Q2: Does the EU AI Act apply to companies outside Europe?

Yes. Similar to GDPR, the EU AI Act has extraterritorial reach. It applies to providers and deployers of AI systems located outside the EU if their systems are used within the EU market or their outputs are used in the EU. This global reach makes the EU AI Act potentially the de facto global standard for AI regulation.

Q3: What happens if my AI system doesn't fit neatly into one risk category?

The EU AI Act provides classification guidance, but borderline cases can be challenging. Organizations should conduct thorough risk assessments and, when uncertain, consider applying the higher risk classification to ensure comprehensive compliance. The European Commission will also provide guidelines and examples to clarify classification criteria.

Q4: How does the EU AI Act handle rapidly evolving AI technology?

The Act is designed to be technology-neutral and future-proof. The European Commission has the power to update the list of high-risk use cases through delegated acts and must periodically review the list of prohibited practices, allowing the regulation to adapt as technology evolves without requiring full legislative revision each time.

Q5: Can small businesses comply with the EU AI Act, or is it only for large corporations?

While the EU AI Act imposes significant requirements, particularly for high-risk systems, it includes measures to support SMEs, including access to regulatory sandboxes, simplified conformity assessment procedures for certain systems, and guidance from authorities. Most AI systems used by small businesses will fall into minimal or limited-risk categories with manageable compliance obligations.

Q6: What's the difference between the EU AI Act and other AI regulations being discussed globally?

The EU AI Act is the world's first comprehensive, legally binding AI regulation. While other jurisdictions like the US, UK, and China are developing AI governance frameworks, the EU AI Act is currently the most detailed and enforceable AI law globally. Its risk-based approach and extraterritorial scope make it a landmark piece of legislation.

Q7: Does the EU AI Act stifle AI innovation?

The Act is designed to foster trustworthy AI while protecting fundamental rights. By focusing stringent requirements on high-risk systems and allowing minimal-risk AI to operate with few obligations, the framework enables innovation in most AI applications. Regulatory sandboxes further support innovation by providing safe testing environments. Many experts argue that clear rules actually encourage innovation by providing legal certainty and building public trust.

Conclusion

The EU AI Act represents a watershed moment in technology regulation: the first comprehensive attempt to govern artificial intelligence through binding legal requirements. By implementing a risk-based framework, the legislation prevents the potential dangers of AI through multiple interconnected mechanisms: outright prohibition of the most harmful practices, stringent requirements for high-risk systems, transparency obligations for limited-risk AI, and a robust governance structure for enforcement.

The Act's approach recognizes that AI is not monolithically dangerous or beneficial; its risks depend on how it's used. By calibrating regulatory requirements to actual risks, the EU AI Act protects fundamental rights and safety without unnecessarily constraining beneficial AI applications.

As AI continues to advance rapidly, the EU AI Act provides a blueprint for balancing innovation with protection. Its extraterritorial reach means its influence will extend far beyond Europe's borders, potentially shaping global AI development practices. Organizations worldwide that deploy AI systems in or for the EU market must understand and comply with these requirements.

The success of the EU AI Act will ultimately depend on effective implementation and enforcement. However, its comprehensive framework, which addresses manipulation, bias, transparency, accountability, and systemic risks, establishes unprecedented protections against AI's potential dangers while creating space for responsible innovation.
